bbudzon

Vectorworks, Inc. Employee

Posts: 662

Everything posted by bbudzon

-

The "Enable Shadows" document preference is now disabled by default because (you'd never believe me when I say this) most people don't notice shadows are off, and the performance increase is astounding. To turn them back on, simply select ROOT and check the "Render Shadows" checkbox 😉

One last note (tying in to what I said earlier): if you can run in realtime without shadows, it will run WAYYYY faster. You can always toggle shadows on and off in realtime mode to see what you need to see when you need to see it. I would recommend always enabling shadows for Rendered Stills and Rendered Movies.

-

Vision 2019 first impressions and questions

bbudzon replied to Gaspar Potocnik's topic in Vision and Previsualization

I apologize deeply; we did not know this workflow was so popular. When we redesigned the rendering engine, this was not taken into account, and it slipped through testing because we weren't aware it was truly being used in the first place. There was also some confusion in house as to whether or not this workflow was possible in 2018, which it now obviously was. The temporary workaround is to make a small texture that is the color you want and apply that to the object. What I'd like to get done in the code is to automate this, although the end result for the user would be the same. The real solution involves using actual color but, again, with the way the rendering engine was re-engineered for quality/performance, this is no longer an easy task.

About the VWX: there actually wasn't one. One of the new features of Vision is the ability to import new formats such as OBJ. The Sponza model was created by a video game company called Crytek. The light plot was simply copied over from the Vision 2017 Demo.v3s.

One cool thing we did to expand upon the textures in the Sponza model was to use a program called CrazyBump. This application takes a simple diffuse texture and does its best to create bump maps, normal maps, and specular maps. This will NEVER look as good as a model baked in, say, Blender. If you are unfamiliar with the concept, I am only vaguely aware of how it works, but let me try to explain. Some 3D modeling programs have a concept called baking. You create an extremely high-poly-count model and a fairly low-poly-count model. You can then feed these two meshes into a function that bakes the high-poly model onto the low-poly model. The result is normal maps and bump maps (I believe). This allows you to use the low-poly model while retaining VERY high-quality renderings.

Look at the lion in the Sponza model, for example. If you had to guess, you would think it was a very high-poly-count model. But if you enter wireframe mode, you would realize that it actually has hardly any polys at all. Not only is this good for performance, but it looks good too! This is possible because of normal maps (in this instance, no bump is applied). I hope all that makes sense. I'll ping @Edward Joseph and @Mark Eli, as they may be able to correct any mistakes I've made.

EDIT: I found the link to the original OBJ that we downloaded. I had to crawl the web archives because the site/model has since been updated. Here is a link to the old site/model that we originally downloaded from: https://web.archive.org/web/20180202205437/http://www.crytek.com/cryengine/cryengine3/downloads

Here is a link to the new model, if you wish to download it and try to pull it into VW/Vision: https://www.cryengine.com/marketplace/sponza-sample-scene
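To make the normal-map idea a bit more concrete, here is a minimal Python sketch of two related steps: estimating a tangent-space normal map from a grayscale height map with finite differences (roughly the kind of approximation a tool like CrazyBump makes from a single texture; its actual algorithm is not described here and this is not it), and using a normal-map texel to shade a flat surface with simple Lambert diffuse lighting. The function names, the `strength` parameter, and the list-of-lists image format are assumptions for illustration only, not how Vision's renderer works.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def height_to_normals(height, strength=1.0):
    """Approximate per-texel normals from a grayscale height map.

    `height` is a list of rows of floats in [0, 1]. Central differences
    (clamped at the borders) estimate the slope in x and y; a steeper
    slope tilts the normal further away from straight up (+Z).
    """
    h, w = len(height), len(height[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, w - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, h - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength
            row.append(normalize((-dx, -dy, 1.0)))
        normals.append(row)
    return normals

def encode_rgb(n):
    """Pack a unit normal into 8-bit RGB, the usual normal-map storage."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)

def decode_rgb(rgb):
    """Unpack an 8-bit RGB texel back into an (approximately) unit normal."""
    return normalize(tuple(c / 255 * 2 - 1 for c in rgb))

def lambert(normal, light_dir):
    """Diffuse intensity: cosine of the angle between the surface normal
    and the direction from the surface toward the light (clamped at 0)."""
    l = normalize(light_dir)
    return max(0.0, sum(n * c for n, c in zip(normal, l)))
```

For a height ramp rising toward +x, the center texel's normal tilts toward -x, so a light from that side shades it fully while a light from the opposite side leaves it dark. That per-texel variation is what makes a low-poly mesh look detailed.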

Blackmagic Intensity Shuttle

bbudzon replied to Robert Janiak's topic in Vision and Previsualization

Unfortunately, I do not believe this is a UVC device (i.e., it will not work with generic programs like Skype). Vision requires UVC capture devices. If you can get any additional information about UVC support and supported pixel formats, that will help us give you a definitive answer. FWIW, CITP and NDI are on the wish list 😉

Attachments are actually broken in Vision and will take quite some time to fix. They are on the radar, but we need more reports to raise the priority, so thank you for this one! The more reports we get, the more likely it is that we can solve the problem, and the better we can gauge what our users need when it comes time for enhancement requests. I know this is far from ideal, but the closest we can get you to a color scroller is to leverage the extra color slots available in the S4. You can adjust each color slot individually from the Properties Window. Yes, I know an S4 doesn't necessarily have this many gobo/color slots, but it allows for a little extra flexibility when you run into a bug like this.

-

I'm catching up on this topic, but a lot of the concerns seem to be related to settings and saved views being lost. There also seems to be some commotion about bidirectional communication and/or Vision's integration into VW.

As far as settings and saved views are concerned, the intended 2018 workflow in the context of this forum post would be:

1. Prepare the VW document.
2. Send it to Vision for the first time.
3. ...work...
4. Now you need to update Vision with new VW changes without losing settings and views, so save a v3s in Vision. This should store your saved views and global settings.
5. In VW, export an ESC that contains only the updated information.
6. In Vision, delete the geometry/fixtures you are getting ready to update.
7. In Vision, merge the ESC to pull in your VW update.
8. Verify that your saved views and globals in Vision are still intact.

If this is not working as described, we can check whether bugs are already open and open them if not.

As for bidirectional communication and/or Vision's integration into VW: both of these are discussed regularly in the office. It is an issue we are aware of and one we are hoping to find a solution to. GDTF/MVR may help solve a lot of these issues down the road.
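The reason this workflow keeps saved views and global settings intact is that the merge only touches the objects that were deleted and re-imported. A toy Python model of that idea follows; the `Scene` class, its fields, and the object names are hypothetical and do not reflect Vision's actual v3s/ESC format or internals.

```python
from dataclasses import dataclass, field

@dataclass
class Scene:
    """Toy stand-in for a Vision scene (hypothetical, not Vision's format)."""
    objects: dict = field(default_factory=dict)          # name -> object data
    saved_views: dict = field(default_factory=dict)      # name -> camera data
    global_settings: dict = field(default_factory=dict)  # e.g. shadows on/off

    def delete(self, names):
        """Remove the geometry/fixtures that are about to be updated."""
        for name in names:
            self.objects.pop(name, None)

    def merge(self, exported_objects):
        """Merge an export containing only the updated objects.

        Saved views and global settings are never touched, which is why
        they survive the update. In this toy model, merging a name that
        already exists is an error, standing in for the duplicate objects
        you would get by skipping the delete step.
        """
        for name, data in exported_objects.items():
            if name in self.objects:
                raise ValueError(f"{name!r} already exists; delete it before merging")
            self.objects[name] = data
```

Usage: `scene.delete(["spot 1"])` followed by `scene.merge({"spot 1": "v2"})` swaps in the updated fixture while `scene.saved_views` and `scene.global_settings` stay exactly as they were.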

-

Fixtures stop working at certain addresses

bbudzon replied to DBLD's topic in Vision and Previsualization

Thanks Dan! I got your email, downloaded your files from Dropbox, and will take a look into the issue!

Fixtures stop working at certain addresses

bbudzon replied to DBLD's topic in Vision and Previsualization

You can email me directly at bbudzon@vectorworks.net 🙂 Thanks again for your report; it gives us a better starting point! Just to reiterate my point, the more details you can give us about this file, the better. What fixture was breaking? What was its unit number? What are some of the DMX addresses Vision has "burned"?

Fixtures stop working at certain addresses

bbudzon replied to DBLD's topic in Vision and Previsualization

This is a VERY detailed write-up. Thank you very much for your investigation into this issue. We have had similar reports in the past, but never with this level of information. Hopefully, with this added knowledge, we'll be able to tackle this issue once and for all. I'd love it if you could post your files and any additional information you may have, such as which specific universes/addresses are hosed and the unit number of the fixture you're having problems with in your file. It may also be beneficial to contact technical support and have them open a ticket for you if you don't have the ability to create one yourself in JIRA.
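When reporting which addresses are affected, it can help to list exactly which channels each fixture occupies. The Python sketch below does that bookkeeping under standard DMX512 assumptions (512 channels per universe, 1-based addresses); the fixture names, footprints, and the `channels_used`/`find_conflicts` helpers are hypothetical and are not part of Vision.

```python
from collections import defaultdict

CHANNELS_PER_UNIVERSE = 512  # DMX512: slots 1..512 in each universe

def channels_used(universe, address, footprint):
    """Return the (universe, channel) pairs a fixture patch occupies.

    Raises ValueError if the patch is invalid or does not fit in one universe.
    """
    last = address + footprint - 1
    if footprint < 1 or not 1 <= address <= CHANNELS_PER_UNIVERSE or last > CHANNELS_PER_UNIVERSE:
        raise ValueError(
            f"U{universe}/{address} with footprint {footprint} "
            f"does not fit within channels 1-{CHANNELS_PER_UNIVERSE}")
    return [(universe, ch) for ch in range(address, last + 1)]

def find_conflicts(patch):
    """Map each (universe, channel) used by more than one fixture to those fixtures.

    `patch` maps a fixture name to (universe, start address, footprint).
    """
    owners = defaultdict(list)
    for name, (universe, address, footprint) in patch.items():
        for slot in channels_used(universe, address, footprint):
            owners[slot].append(name)
    return {slot: names for slot, names in owners.items() if len(names) > 1}
```

For example, a 16-channel fixture at U1/1 and an 8-channel fixture at U1/10 overlap on channels 10 through 16, which `find_conflicts` reports; a patch that runs past channel 512 is rejected outright.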

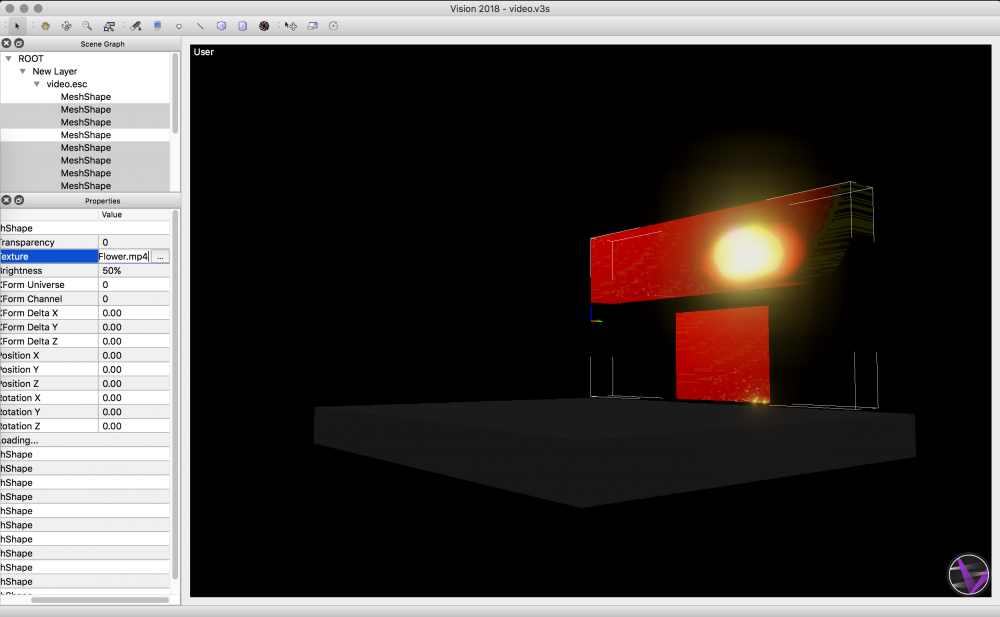

We have posted a Tech Bulletin which should hopefully answer some of your system requirement questions. I'd also refer you to the online documentation for workflow-related help: http://www.help.vision.vectorworks.net/

Capture cards are what you want to search for, and it all starts by assigning a capture texture to a MeshShape. Hopefully this will get you started: http://app-help.vectorworks.net/2018/Vision/index.htm#t=2018_Vision%2FVision%2FSpecifying_Textures.htm%23XREF_12646_Specifying_Textures&rhsearch=capture card&rhsyns=

Lastly, Vision does not currently support CITP, but it has been a highly requested feature, along with NDI. The best way to get multiple inputs into Vision is through a multi-input capture card, multiple capture cards, or a single HDMI feed that combines video into a grid. Once Vision sees this single HDMI grid feed, you can crop it into individual live video streams.
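Cropping a single HDMI grid feed comes down to dividing the frame into cells. As a rough illustration only (this is not Vision's cropping UI or code), the Python sketch below computes the pixel rectangle for each cell of a `rows` x `cols` grid; the function name and the assumption of equal-sized cells are hypothetical.

```python
def grid_crops(width, height, rows, cols):
    """Return (x, y, w, h) crop rectangles for each cell, row by row.

    Cells are assumed to be equal in size. Integer division keeps every
    rectangle on whole pixels; any leftover pixels go to the last
    column/row so the cells exactly cover the frame.
    """
    cell_w, cell_h = width // cols, height // rows
    crops = []
    for r in range(rows):
        for c in range(cols):
            x, y = c * cell_w, r * cell_h
            w = width - x if c == cols - 1 else cell_w
            h = height - y if r == rows - 1 else cell_h
            crops.append((x, y, w, h))
    return crops
```

For a 1920x1080 feed carrying four sources in a 2x2 grid, `grid_crops(1920, 1080, 2, 2)` gives four 960x540 rectangles starting at (0, 0), (960, 0), (0, 540), and (960, 540).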

-

Hey Richard, I apologize for the inconvenience. It appears there are some bugs in this code that had not yet been reported, so thank you for your feedback; we will look into resolving these issues ASAP. The cameras will take a bit more work on our end to fix than the ambient, but I can at least give you a workaround for the ambient in the meantime. It appears the ambient is causing issues when it tries to update the Global Properties UI with the new DMX value. What seemed to work for me was selecting anything other than ROOT in the Scene Graph Dock when adjusting the ambient this way. (Since the Global Properties are only visible when ROOT is selected in the Scene Graph, selecting something else so the Globals aren't displayed works around the issue.) Thank you again for running through the software and reporting these issues to us so we can resolve them.

-

Ghosting of hidden or deleted layers in Vision

bbudzon replied to tddesigner's topic in Vision and Previsualization

Good, @tddesigner, I'm glad this worked for you. My apologies for it being broken in the first place, but now that we know it is broken, we can fix it! Thanks again for your feedback, and let us know if you have any other concerns.

Ghosting of hidden or deleted layers in Vision

bbudzon replied to tddesigner's topic in Vision and Previsualization

Hey @tddesigner, this is definitely a bug. We can fix it for SP4, but we need to get you a workaround in the meantime. This bug is related to static vs. dynamic caching of geometry: the static cache is broken. One way to resolve your issue is to move the models you want to be invisible into the dynamic cache. To do this:

1. Select the MeshShapes or Layers you'd like to be invisible.
2. Assign an XForm Universe and an XForm Address to these objects.
3. Assign an XForm Delta Y of 5000 (inches), or something very large that will move the objects out of view.
4. On your light board, make sure you are sending zero DMX to the XForm Universe and Address.
5. To "hide" the geometry, send a value of 255.

This mimics flying out a set piece on a fly rail.
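The fly-out trick relies on the XForm channel scaling the Delta Y offset by the DMX level: 0 leaves the geometry where it was modeled, and 255 applies the full offset. The exact curve Vision uses between those two points is not stated here, so the Python sketch below simply assumes a linear mapping; the function name is hypothetical.

```python
def xform_offset(dmx_value, delta_y=5000.0):
    """Offset (inches) applied at a given DMX level, ASSUMING a linear map.

    0 -> no offset (object stays in place); 255 -> the full Delta Y,
    which for a large Delta Y moves the object out of view.
    """
    if not 0 <= dmx_value <= 255:
        raise ValueError("DMX values range from 0 to 255")
    return delta_y * dmx_value / 255
```

Under that assumption, a Delta Y of 5000 inches puts the object about 417 feet away at full, far enough to be out of view in most scenes, and any in-between level would leave it partway "flown out".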

This bug is in VW 2018 SP2, not Vision 2018 SP2. We will try to figure out whether this broke in a VW SP and why. Fortunately, there is a fairly easy and straightforward workaround: simply don't assign video from VW until this bug is resolved, or use an older VW release. I would recommend using VW 2018 SP2 to export your video screen with static images for the texture, such as color bars or the VW logo. This will bring the screen over as one mesh shape. That mesh shape can then have its video assigned once in Vision, and things should work fine.

I wanted to get you an updated file that had only one mesh shape for the screen, but it's harder to remove a video file attached to a screen than I would have thought. We will discuss this internally and try to make that workflow easier. For this reason, I'd recommend you follow my steps above so you don't end up with multiple mesh shapes for your screen in your file. That being said, if you don't mind the extra mesh shapes, I've attached a zip that contains an updated v3s with video working for all mesh shapes. I hope your video screen issues are resolved for now and that you enjoy the new features in Vision 2018 SP2!

Video bug.zip

-

Everyone, we have fleshed out the implementation and workflow for capture devices. We are in the process of getting a beta together to verify customers are not experiencing issues before we release Vision 2018 SP2. Hopefully we've gotten this issue resolved and can get you guys pointed in the right direction! I'm working on getting a Tech Bulletin together to flesh out the details. Keep your eyes peeled!

-

All, Keep an eye on this thread: We will be keeping it up to date with the latest information. I would hold off on buying capture cards until we can make some cheaper recommendations (a $3k capture card is unrealistic), but at the very least if you have a capture card already, you can verify that it either does or does not meet the requirements we believe are necessary for capture to work properly.

-

Feature Request: NDI Support

bbudzon replied to Daniel B. Chapman's topic in Vision and Previsualization

@Daniel B. Chapman Keep an eye on this thread: We will be keeping it up to date with the latest information. I would hold off on buying capture cards until we can make some cheaper recommendations (a $3k capture card is unrealistic), but at the very least, if you have a capture card already, you can verify whether or not it meets the requirements we believe are necessary for capture to work properly. And to address your last post a little more directly, it appears that as long as your capture card supports UVC and YUYV422/YUV420P, we will use the Windows Media Foundation and AV Foundation libraries to get it to work with Vision.

How to get media server into Vision

bbudzon replied to Anthony Lee's topic in Vision and Previsualization

@Anthony Lee Keep an eye on this thread: We will be keeping it up to date with the latest information. I would hold off on buying capture cards until we can make some cheaper recommendations (a $3k capture card is unrealistic), but at the very least, if you have a capture card already, you can verify whether or not it meets the requirements we believe are necessary for capture to work properly.

Hello all,

There has been a slew of problems surrounding video capture cards and Vision. A large part of this is due to the number of manufacturers out there and the number of proprietary drivers required. Some capture cards explicitly state that they only work with certain software suites.

We have been doing a lot of investigation into the capture code to figure out why some devices were working and some weren't. As it turns out, this was more a matter of how the operating system handled the capture device than how Vision handled it. What we have found, and tested to a limited extent, are the requirements you should look for in a capture card.

First and foremost, the capture card must be supported natively by Windows/OS X, meaning no drivers are required (they can be recommended, just not required). These devices work through a universal driver for video capture called UVC. UVC drivers ship with Windows and OS X, so again, no installation is required. Lastly, video can be output in several pixel formats: RGB24, RGBA32, ARGB32, ABGRA32, UYVY422, YUYV422, YUV420P, H264, MJPEG, and many more. Currently, Vision only supports YUYV422 and YUV420P.

Once we were able to determine the operating system's requirements for video capture cards, we were able to make educated guesses as to which devices may work. We happened to have an AV Bridge MATRIX PRO lying around (http://www.vaddio.com/product/av-bridge-matrix-pro) that we were able to determine supported UVC. We weren't sure what pixel format it was sending out, but we decided to give it a shot anyway. Turns out, it worked!!

We will be purchasing more cards over the weekend that we believe meet the operating system criteria, to test them out. Once we have those cards in house, we should be able to confirm whether most (if not all) UVC-compatible / YUYV422 capture devices will work with Vision.
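The criteria above can be summarized as a simple checklist. The Python sketch below encodes them (native UVC support, no required driver, and at least one of YUYV422 or YUV420P) and also estimates the size of one uncompressed frame in each supported format, which gives a feel for the bandwidth involved. The `CaptureDevice` type, its fields, and the example device are hypothetical; how you actually learn a device's pixel formats depends on the OS and the manufacturer's documentation, and passing the checklist only means a device is *likely* to work, since testing so far has been limited.

```python
from dataclasses import dataclass, field

SUPPORTED_FORMATS = {"YUYV422", "YUV420P"}  # what Vision supports per this post

@dataclass
class CaptureDevice:
    name: str
    uvc: bool              # exposed through the OS's built-in UVC driver
    driver_required: bool  # needs a vendor driver just to show up
    pixel_formats: set = field(default_factory=set)

def is_likely_compatible(dev):
    """Apply the checklist: UVC, no required driver, a supported format."""
    return dev.uvc and not dev.driver_required and bool(dev.pixel_formats & SUPPORTED_FORMATS)

def frame_bytes(width, height, fmt):
    """Size in bytes of one uncompressed frame.

    YUYV422 packs two pixels into four bytes (Y0 U Y1 V): 2 bytes/pixel.
    YUV420P is a full-size Y plane plus quarter-size U and V planes:
    1.5 bytes/pixel (even width and height assumed).
    """
    if fmt == "YUYV422":
        return width * height * 2
    if fmt == "YUV420P":
        return width * height + 2 * ((width // 2) * (height // 2))
    raise ValueError(f"unsupported format: {fmt}")
```

For example, a hypothetical UVC card offering MJPEG and YUYV422 with no required driver passes the checklist, while one that only offers MJPEG does not. At 1920x1080, a YUYV422 frame is 4,147,200 bytes and a YUV420P frame is 3,110,400 bytes.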

-

This was something that was highly requested and highly considered for our 2018 release. Please stay tuned for updates.

-

Vision Fixture Mode Editor in VW

bbudzon replied to VClaiborne's question in Wishlist - Feature and Content Requests

We definitely need a better way to find Vision fixtures in VW. A little developer secret: in 2017, the Inst Type field can _sort of_ be used as a search box for populating the fixture mode. In the 2018 Beta, this was moved to the Fixture ID field.

I may be able to clarify some things, if there is any misunderstanding. Universe and Address are fields that, in both VW and Vision, contain plain data; basically, each is just a number. It was not hard to get both VW and Vision using these numbers, and that is why, when you fill out Universe/Address information in VW, it exports to Vision as you'd expect (by the way, if you want to change which fields in VW map to Vision, you can do so in Spotlight Preferences->Vision Mapping).

Gobos and Colors were not so straightforward. While we do want to work toward having one mechanism for Gobos and Colors, we haven't had the opportunity yet. It will take some time for us to get Vision switched over to VW resources. That being said, we now have two "sets" of Gobo/Color resources in VW since Vision was integrated. One set is specific to Renderworks; this is what you are likely used to using. The gobos/colors you have likely always used only affect Renderworks and are specific to VW, meaning they are selected through VW resources. The other, new set of resources/fields/dialogs is specific to Vision.

If you are running VW 2017 or newer, you can continue using the old "Edit Vision Data" dialog to set Gobos and Colors in VW and have them export to Vision (although these will not affect Renderworks, because they are Vision-specific). This is the old workflow that Vision supported back in the Vision2/4 days. Alternatively, we have added a "Color Wheel", "Gobo Wheel", and "Animation Wheel" to the Lighting Devices OIP Edit button.

Someone else may need to chime in here, but I believe once you have your fixtures set up in VW with Vision Data and Renderworks Data the way you'd like, you should be able to copy and paste that fixture into new VW documents. Hopefully that will save you the time of having to "redo" all of the same gobos/colors over and over again.

The last thing I will say is that any time you can set something for Vision from VW, you should. The reason is that communication between the two products is currently one-way, which means the vwx will always be the "master" file. If you keep it up to date, you should be able to get _fairly_ close to the same esc/v3s by exporting it again. Some things, like conventional pan/tilt, will need to be adjusted every time you export from 2017, and that is something we are looking into.
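Because data only flows from VW to Vision, each export is a fresh, one-way projection of the vwx. The Python sketch below models that with a configurable field mapping, in the spirit of Spotlight Preferences->Vision Mapping; the field names, `DEFAULT_MAPPING`, and `export_fixture` are hypothetical and do not reproduce the real preference or export format.

```python
# VW field -> Vision field (hypothetical names, for illustration only)
DEFAULT_MAPPING = {
    "Universe": "universe",
    "Address": "address",
    "Unit Number": "unit",
}

def export_fixture(vw_record, mapping=DEFAULT_MAPPING):
    """Build the Vision-side record from a VW lighting device record.

    Only mapped fields are copied, and nothing is ever written back to
    `vw_record`, so a change made only on the Vision side is replaced by
    the vwx value the next time you export.
    """
    return {vision: vw_record[vw] for vw, vision in mapping.items() if vw in vw_record}
```

Editing the exported dictionary (the "Vision side") never changes `vw_record`, so re-exporting restores the vwx values; remapping a field is just a matter of passing a different `mapping`.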

-

Need help merging .esc file into Vision

bbudzon replied to Cory Pattak's topic in Vision and Previsualization

Just wanted to clarify something, Eddy: we changed that grouping behavior. We couldn't come up with a good reason not to export groups, so we changed it to export only items that are marked as Visible in VW. That being said, groups are now exportable, which is nice from a VW standpoint, since some people may be prone to grouping all of the stage geometry together (or perhaps the mic geometry and the podium).

Unfortunately, I do not believe it is possible to update Vision content in VW Spotlight without using the Vision Updater while a dongle is plugged in. The reason is that content updates are paid for, and we need a way to verify that the person asking for the update has paid. Luckily, Vision does have a dongle system. This allows you to install Vision on as many machines as you like because, unless the dongle is plugged in, it will not update and will only run in demo mode. My recommendation for your work, then, would be to install Vision on all of your VW machines and "share" the dongle to get content updates into VW.

Again, the main advantage of a dongle is that only one non-demo copy of Vision can be running at any given time. So we don't have to worry as much about piracy, because you own a more "physical" license to our software. Product keys are a different story, because they are essentially virtual dongles. Being virtual, simply using one product key on two systems "duplicates" the license and, if we didn't check for it, would allow you to run two copies with one key. With the dongle (physical license), we don't mind giving you Vision content for all of your VW machines, since the content is useless outside of Vision. One dongle still allows you to run only one copy of Vision, which is our main concern in licensing.

Lastly, we do realize this can be improved, and we are looking into ways to streamline this process in the future. Hopefully this helps!

-

This would work _most_ of the time. We will have to tweak your seating section a little to illustrate my point, but it will show you the potential problems with checking only the last seat. Imagine a circle going through your last row. Now imagine that the circle _almost_ touches the edge of the bounding box but doesn't actually touch it. What we end up with is essentially one arc segment instead of two. Whether we check the center point of the seat or all four corners, if we only checked the last one or two seats of that segment, you'd get the middle seats of the back row jutting out of your seating section, and then you guys would wonder (as would I if I were you) why we aren't obeying the boundary.

One potential solution involves special-casing arcs that are close to an edge so this doesn't happen, but this gets terribly complicated when you introduce holes and concave shapes (think donuts and U shapes; better yet, think Swiss cheese lol). There is definitely room for optimizing the inside-the-boundary checks, but there are a lot of weird edge/corner cases that force us to choose a fork in the road. Some people will be happy those seats are jutting out of the seating section because they didn't want two back rows. Others would be happy that it split the back row, because there truly isn't room for those seats on that particular arc (you can always custom-place seats by clicking "Edit Seating"). I'm hoping that as we continue working on this and getting feedback from you guys, we will be able to improve the workflow to support all of the cases you need, hopefully mitigating some of those "damned if you do, damned if you don't" types of bugs.

You could absolutely curve that edge so your seats would fit. I would think this would be one of the better solutions.

Perhaps the main point I wanted to make, however, is that you can control "orphaned" seats to some extent by using the "Minimum Number of Seats Per Row" limit. In your example above, you could set the minimum seats per row to 6, and the back row (which is probably actually two row segments) should be removed. Unfortunately, this variable is global to all row segments (in curved seating), so it would also remove the first row in your example (all rows with 5 seats or fewer will not be placed). This was mainly introduced for curved seating, as a perfect square with a focus point in the center often ends up with 2 seats in every corner (or something like that).

Unfortunately for me, I don't pay much attention to who this tool is for (ok, that's not entirely true!). So I apologize if accounting for 1000's of seats in a seating section is kind of silly. Figuring out what you guys need is more BrandonSPP's job. I get to argue with Brandon about how every possible feature that can be implemented should be supported, and he scales me back hahahahh. So when I started on all of this, I wanted to support S-shaped, Swiss-cheese-holed, curved seating sections that have been chevroned 15deg, rotated in world view 90deg, and are large enough to cover a Walmart parking lot. Oh, and don't forget alternating row offsets! I want it to do it all, and all at once! But in all seriousness, that's where your feedback comes into play too. If you guys aren't making seating sections that big, we can do things like beef up the algorithms so they are smarter, albeit a little slower.
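The trade-off between checking only a seat's center and checking all four corners, and the effect of the "Minimum Number of Seats Per Row" limit, can be sketched with a standard ray-casting point-in-polygon test. This is a minimal Python illustration assuming rectangular seats and a simple (non-self-intersecting) boundary polygon; the helper names and the seat model are hypothetical, and this is not the Seating Section tool's actual code.

```python
import math

def point_in_polygon(pt, poly):
    """Ray casting: toggle 'inside' each time a ray toward +x crosses an edge."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def seat_corners(center, width, depth, angle=0.0):
    """Corners of a width x depth seat rotated by `angle` radians about its center."""
    cx, cy = center
    c, s = math.cos(angle), math.sin(angle)
    half = [(-width / 2, -depth / 2), (width / 2, -depth / 2),
            (width / 2, depth / 2), (-width / 2, depth / 2)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in half]

def seat_fits(center, width, depth, boundary, angle=0.0, check_corners=False):
    """One test per seat (center only) or four tests (every corner)."""
    if not check_corners:
        return point_in_polygon(center, boundary)
    return all(point_in_polygon(p, boundary)
               for p in seat_corners(center, width, depth, angle))

def drop_short_rows(rows, min_seats):
    """Discard row segments with fewer than `min_seats` seats."""
    return [row for row in rows if len(row) >= min_seats]
```

A 20x20 seat centered 5 units inside the edge of a 100x100 square passes the center-only check even though half of it hangs outside; the corner check rejects it at four times the cost per seat. With `min_seats=6`, any row segment of 5 seats or fewer is dropped, including a short front row.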

-

We definitely appreciate the feedback. Just a few thoughts:

1. Curved seating should respect the boundary, and this is something we are looking into. In the original implementation, we were worried that performing 4 checks on every seat would be too slow, especially in very large seating sections that may contain 1000's of seats. Our solution was to use the center point of the seat instead, limiting the number of checks per seat to one. Unfortunately, while the centers of the seats were guaranteed to be inside the bounding box, the legs of the chairs were not. We had considered putting in a default offset, but I'm not sure this made it into the release. Possibly the most important part of this point is that this bounding box offset can be controlled through the "Allowable Distance Past Boundary" field (which can be positive to push seats out, or negative to pull seats in). Try tweaking this value to get seats closer to or further from the edges of your seating section. Not ideal, but hopefully it will provide you guys with a workaround. We are investigating what would be required to remove this offset entirely and perform a real check on all four corners of the chair, but it may slow the tool down significantly (which I guess is better than never getting the seating section you want!).

2. "Seat Spacing" for curved seating, specifically, is a minimum spacing value, not an absolute value. In our original implementation, the spacing for curved seating was absolute. However, this caused the seating section not to align along the edges, and in general it cannot: if all seats are along an arc and they must be spaced exactly the same distance apart, you cannot guarantee they will align on an edge. To make matters worse, because each arc has a different radius, each row will align to each edge differently. This made it almost impossible to get a "pretty" seating section. So, in order to make the seating section look symmetrical, we HAD to treat this as a minimum value and not an absolute value. We could look into adding absolute spacing to curved seating, but if you guys saw what we did during development, you'd never want to use it. It was pretty ugly... Much prettier when it aligns along those edges for you.

3. The reason the last row shows a gap in the middle is the check that occurs when placing a seat. In the current implementation, if the center of the seat is not in the bounding box, it simply isn't placed. In this case, almost all of the seats in the last row fit inside the bounding box, but the few in the middle didn't. There are two ways to address this. The most obvious solution is to reshape your bounding box, dragging the left edge a few inches, so these seats can pass the check and fit inside the poly. The other option is to use the "Allowable Distance Past Boundary" field I described above. The downside to this method is that it applies to all edges, whereas the reshape will only add more seats on the edge you extended.

4. This definitely appears to be a bug. We will look into getting a fix together to address the issue.
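Point 2 can be illustrated numerically: if a row of seats must start and end exactly on two edges, a fixed spacing generally leaves a remainder, so treating "Seat Spacing" as a minimum and stretching it slightly is what lets every row land on the edges. A minimal Python sketch, assuming spacing is measured center to center along the arc and that the first and last seats sit exactly on the edges (both simplifications; the function name is hypothetical and the tool's real layout logic is not shown here):

```python
import math

def arc_seat_layout(radius, sweep_deg, min_spacing):
    """Seat count and actual spacing for one arc-shaped row.

    The row spans `sweep_deg` degrees of a circle of `radius`. With a seat
    at each end, n seats leave n - 1 gaps, so the most seats that respect
    the minimum is floor(arc_length / min_spacing) + 1. The leftover length
    is spread evenly over the gaps, so actual spacing >= min_spacing.
    """
    arc_length = radius * math.radians(sweep_deg)
    gaps = math.floor(arc_length / min_spacing)
    if gaps == 0:
        return 1, 0.0
    return gaps + 1, arc_length / gaps
```

With a 22-unit minimum and a 60-degree sweep, a row of radius 300 gets 15 seats about 22.44 apart, while the next row out at radius 320 gets 16 seats about 22.34 apart. Each row needs its own slightly different spacing to meet the edges, which is why a single absolute spacing could not keep every row aligned.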