Posts: 400
Reputation: 61 (Excellent)

Personal Information

Occupation: Set & Lighting Designer
Homepage: www.youtube.com/Semaj000
Hobbies: Innovative Technology & Creative Design
Location: Australia


-

In Stable Diffusion, negative prompts are used to guide the model away from generating certain elements in the image. When crafting a negative prompt, describe the unwanted elements directly, without negations like “no.” For your example, if you don’t want an image with bright colors, the negative prompt should simply be “bright colors”.

Note: It feels horrible for me as an Australian to use Colors over Colours; however, slightly more of the SD datasets acknowledge American English...

As a more detailed example, suppose your objective was to make an image of a cozy vintage cafe with wooden furniture, dim lighting, and a warm atmosphere, AND to avoid any modern elements, bright colors, and people.

Positive Prompt Input Field: “A cozy vintage cafe with wooden furniture, dim lighting, and a warm, inviting atmosphere. The cafe has antique decorations, old bookshelves, and (a small fireplace).”

*Note: In the examples below, the weighting of (a small fireplace) was increased for the second image as another test.

Negative Prompt Input Field: “Bright colors, modern elements, people, electronic devices, neon signs, plastic furniture”

Happy generating! J
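If you assemble prompts in a script rather than typing them into the input fields, the "no negations" rule is easy to enforce. A minimal Python sketch, assuming a hypothetical helper `to_negative_prompt` and an illustrative list of negation words: it strips a leading "no" / "without" / etc. so each unwanted element is described directly, removes duplicates, and joins the terms into one comma-separated negative prompt. The returned dict uses the `prompt` / `negative_prompt` keyword names accepted by, for example, Hugging Face diffusers' Stable Diffusion pipelines; other front ends (including VW) may name these fields differently.

```python
import re

# Illustrative list of leading negation words that a negative prompt should NOT contain.
NEGATIONS = re.compile(r"^(?:no|not|without|avoid)\s+", re.IGNORECASE)


def to_negative_prompt(terms: list[str]) -> str:
    """Describe unwanted elements directly: 'no bright colors' -> 'bright colors'."""
    cleaned: list[str] = []
    for term in terms:
        term = NEGATIONS.sub("", term.strip())
        # Skip empty terms and case-insensitive duplicates.
        if term and term.lower() not in (t.lower() for t in cleaned):
            cleaned.append(term)
    return ", ".join(cleaned)


def build_prompts(positive: str, unwanted: list[str]) -> dict[str, str]:
    """Return keyword arguments in the prompt / negative_prompt style used by diffusers."""
    return {"prompt": positive, "negative_prompt": to_negative_prompt(unwanted)}


if __name__ == "__main__":
    kwargs = build_prompts(
        "A cozy vintage cafe with wooden furniture, dim lighting, and a warm, inviting atmosphere",
        ["no bright colors", "without modern elements", "people", "neon signs"],
    )
    print(kwargs["negative_prompt"])  # bright colors, modern elements, people, neon signs
```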

-

@zoomer negative prompts carry the same weighting as positive prompts, so we need to be super careful with blanket negative prompting. For example, the terms 'gross proportions' and 'ugly' are not terms that should be baked into the negative steering of a Model or Checkpoint. Imagine you're doing a Tim Burton, Guillermo del Toro or Wes Anderson inspired piece: the characters and theme you're aiming for are inherently 'grossly proportioned' and, to some, 'ugly'. I agree with @Luis M Ruiz that suggesting these prompts is, for the most part, helpful for those learning AI visualisation and as a GUI feature overall; however, I would not like to see them baked into the Model or Checkpoint in any way, as I think it would immediately set a model bias that would limit creativity.
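For context on why blanket negatives steer so hard, here is the classifier-free guidance step as it is commonly implemented in Stable Diffusion front ends such as Automatic1111 and diffusers, where the negative prompt takes the place of the empty 'unconditional' prompt. Whether VW applies negatives in exactly the same way is an assumption. With $\epsilon_\theta(x_t, c)$ the model's noise prediction for the current latent $x_t$ under prompt $c$, $c_+$ the positive prompt, $c_-$ the negative prompt and $s$ the CFG scale:

$$
\tilde{\epsilon} = \epsilon_\theta(x_t, c_-) + s\,\big[\epsilon_\theta(x_t, c_+) - \epsilon_\theta(x_t, c_-)\big] = s\,\epsilon_\theta(x_t, c_+) - (s-1)\,\epsilon_\theta(x_t, c_-)
$$

At a CFG of 7 this is $7\,\epsilon_\theta(x_t, c_+) - 6\,\epsilon_\theta(x_t, c_-)$: every negative term is pushed away from on every step of every image, almost as strongly as the positive prompt is pulled towards, so a blanket 'ugly' biases all results, not just the bad ones.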

-

I think much of this relies heavily on the success of Apple Vision Pro, and on whether Apple is using the same RoomPlan technology for elements of its mapping (which I suspect it isn't; I think it's just active point-cloud LiDAR for surface and object detection). Realistically, until Apple updates RoomPlan for uneven surfaces, curved walls, stairs, columns, raked floors and many other things, I don't see why/how Nomad would be able to do anything further with this feature; however, I'm happy to be proven wrong! 😛

-

I personally think that using generic bulk negative prompts is problematic when working with text-to-image machine learning models. I've seen a lot of people these days who just leave their negative prompt as "bad anatomy, deformed hands, deformed face, ugly hair", eventually filling their prompt cap with such phrases.

The biggest issue with this is how the model treats the negative phrases. For example, although "deformed hands" will reduce finger deformity overall, it will also generate images with far less emphasis on "hands" overall, leading to people with their arms behind their backs or out of frame. A better approach, when refining the image, is to use positive phrases like "detailed hands" or "detailed hair", which steer the model towards a positive and detailed emphasis on those elements.

If you've looked at a few of my previous posts on the current implementation of Stable Diffusion in VW2024, the above methodology, and prompts in general, can only go hand-in-hand with better control over the Stable Diffusion generation, primarily:

- Sampling Steps (crucial for a prompt like "detailed hair", as fewer than 20 sampling steps won't even get to hair detailing)
- InPainting (masking just an area of an image for generative change; for example, you could mask just the hand of a person, then run 20 batched images over just those hands at a low "Creativity" / CFG and choose the best fit)

Stable Diffusion (same engine as VW, Automatic1111 interface)

Below is an example of a generated human on the right (text-to-image creation), with the InPainting region (black) shown on the left. Note this is Stable Diffusion XL in the Automatic1111 container. The goal of this example is to show the ease with which InPainting can correct fingers (or other elements).

(Left: InPainting Mask, Right: Chosen Iteration of Human Figure)

The video below shows 50 example hand replacements. Each generation took around 7 seconds on a local machine.

Hands Example.mp4: 50 images created in 1 batch for hand replacement, 40 sample steps with 7 CFG, 0.4 Denoising Strength (Creativity).

Overall, in my experience, prompts (both positive and negative) should be applied on a per-image / per-intention basis, as starting with a bulk prompt eliminates huge portions of the dataset. Or, as @Luis M Ruiz rightly stated:
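The hand-replacement settings can be sanity-checked with some quick arithmetic. A minimal Python sketch, assuming the common img2img/inpainting behaviour (default Automatic1111 settings, and the `strength` parameter in diffusers) where only roughly the last `steps × denoising strength` steps of the schedule are actually run on the masked area. A simple per-pixel blend shows how a (possibly feathered) mask mixes new and original pixels; real inpainting pipelines do this on full images and often on latents during sampling, so treat it as an illustration only. The helper names are hypothetical and the 7-second figure comes from the post.

```python
def effective_denoising_steps(sampling_steps: int, denoising_strength: float) -> int:
    """Approximate steps actually run when img2img/inpainting starts from a partly noised image."""
    return int(sampling_steps * denoising_strength)


def batch_minutes(images: int, seconds_per_image: float) -> float:
    """Rough wall-clock time for a batch, assuming a constant time per image."""
    return images * seconds_per_image / 60


def composite(original: list[float], generated: list[float], mask: list[float]) -> list[float]:
    """Per-pixel blend: mask 1.0 keeps the new pixel, 0.0 keeps the original, values between feather the edge."""
    return [m * g + (1 - m) * o for o, g, m in zip(original, generated, mask)]


if __name__ == "__main__":
    print(effective_denoising_steps(40, 0.4))  # 16 steps of the 40-step schedule
    print(round(batch_minutes(50, 7), 1))      # 5.8 minutes for the 50-hand batch
    print(composite([0.2, 0.2, 0.2], [0.9, 0.9, 0.9], [0.0, 0.5, 1.0]))
```

With the post's settings, 40 steps at 0.4 strength means about 16 denoising steps per hand, and 50 images at roughly 7 s each is just under six minutes for the whole batch.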

-

@zoomer, sorry, I should have been slightly clearer. The list in my earlier post is the capabilities of Apple RoomPlan. I'm unsure whether they're all implemented in Nomad; that would probably require someone from the dev team to chip in. I mainly posted the list so people could make a clear assessment of, for example, "Will Nomad know what a car is if I scan it?" As indicated by that list, Apple RoomPlan doesn't know what a car is, so it's safe to say Nomad won't know what a car is. Again, the dev team might be able to shed more light on this and, more importantly, I'd like to know the future of this app in terms of active development.

-

Ahoy,

Having worked with Apple RoomPlan on other projects for a little while now, I'm fairly confident that Nomad Scan is a refined/polished version of the full base code and sample available, as my sporadic testing over the last few years seems to correlate. @techdef, I echo the sentiments on the lack of stairs and multi-level surfaces.

For those of you interested in the Apple RoomPlan capabilities, they're available here ( https://developer.apple.com/documentation/roomplan/ ), but as a short list, here are the available room details:

Inspecting room details
- The story, floor number, or level on which the captured room resides within a larger structure.
- An array of floors that the framework identifies during a scan.
- A 2D area in a room that the framework identifies as a surface.
- An array of doors that the framework identifies during a scan.
- An array of objects that the framework identifies during a scan.
- A 3D area in a room that the framework identifies as an object.
- An array of openings that the framework identifies during a scan.
- An array of walls that the framework identifies during a scan.
- An array of windows that the framework identifies during a scan.
- One or more room types that the framework observes in the room.

And the available object detection categories:

Determining the object category (each is "a category for an object that represents..."):
- a bathtub
- a bed
- a chair
- a dishwasher
- a fireplace
- an oven
- a refrigerator
- a sink
- a sofa
- stairs
- a storage area
- a stove
- a table
- a television
- a toilet
- a clothes washer or dryer

I think with these two lists in hand you can see exactly where the capabilities are, at least in my current usage and understanding of both Nomad and Apple RoomPlan. Happy scanning! J
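The "Will Nomad know what a car is?" question comes down to a set-membership check against the object categories listed above. A minimal Python sketch, assuming the category names as they appear in Apple's `CapturedRoom.Object.Category` enumeration (check the linked documentation for the current list) and, as discussed in this thread, that Nomad adds no categories beyond RoomPlan's. `is_recognised` and the synonym table are hypothetical helpers for illustration.

```python
# Object categories in Apple RoomPlan's CapturedRoom.Object.Category, matching the list above.
ROOMPLAN_OBJECT_CATEGORIES = frozenset({
    "bathtub", "bed", "chair", "dishwasher", "fireplace", "oven",
    "refrigerator", "sink", "sofa", "stairs", "storage", "stove",
    "table", "television", "toilet", "washerDryer",
})

# Illustrative everyday synonyms mapped onto RoomPlan category names (an assumption, not Apple's).
SYNONYMS = {
    "couch": "sofa", "fridge": "refrigerator", "tv": "television",
    "washer": "washerDryer", "dryer": "washerDryer", "bath": "bathtub",
}

# Case-insensitive lookup set.
_LOOKUP = {name.lower() for name in ROOMPLAN_OBJECT_CATEGORIES}


def is_recognised(thing: str) -> bool:
    """True if an object of this kind falls into one of RoomPlan's object categories."""
    key = thing.strip().lower()
    key = SYNONYMS.get(key, key).lower()
    return key in _LOOKUP


if __name__ == "__main__":
    for thing in ("car", "Fridge", "stairs", "piano"):
        print(thing, is_recognised(thing))
```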

-

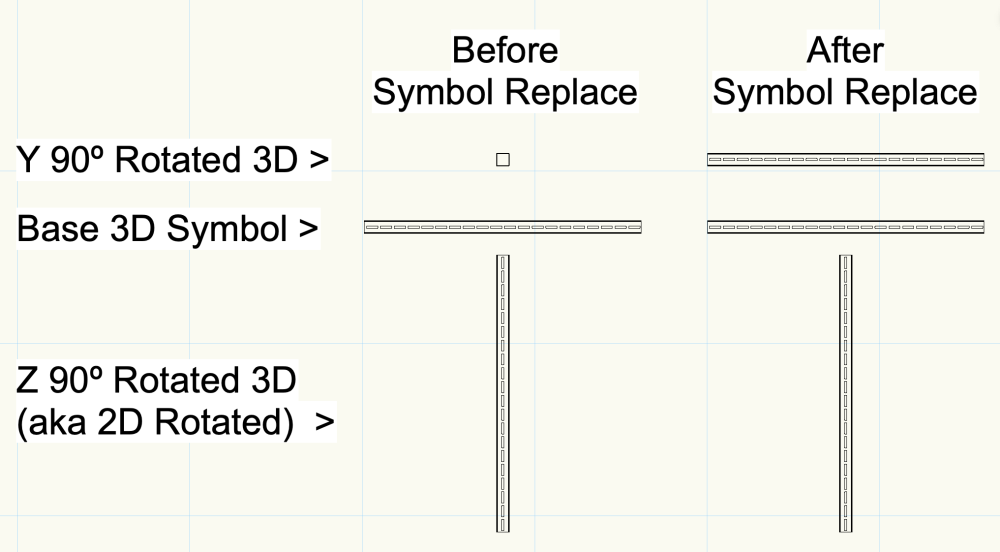

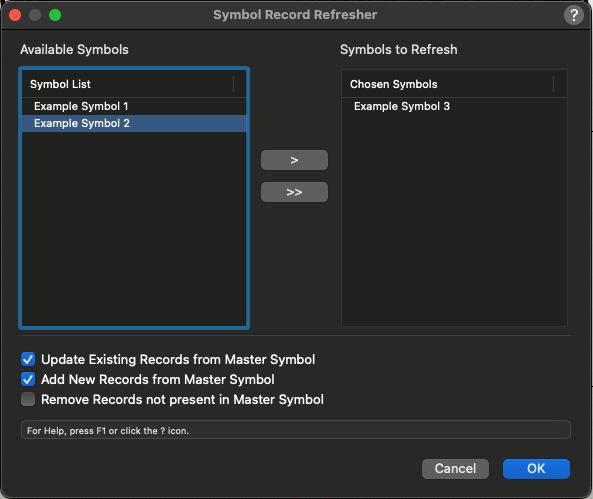

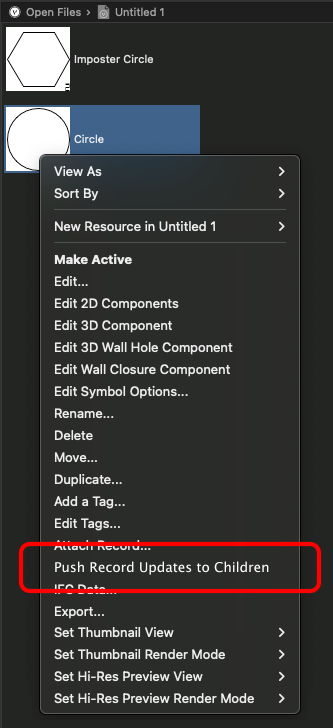

Ahoy all,

Current Methodology

I'm looking for other users' feedback on a methodology for record 're-issuing' from the master symbol to symbols in the drawing. We know the following:

- Records can be attached within the symbol by selecting nothing within the symbol's edit mode and then choosing 'Attach Record' from the OIP, or via the Resource Browser right-click context menu (or one of about three other methods of choice).
- Symbols inserted after this point will automatically have the record placed on the external container, available in the OIP Data tab.
- If the record field data is changed at a later stage in the symbol master, it will not be forced onto child symbols already present in the drawing (I agree with the methodology here 100%).

The current best ways to retrospectively update symbols already in the drawing are:

- Create a worksheet summarising the desired symbols and change the data manually, or
- Use Modify > Convert > Replace with Symbol... and choose "Use records of substitute symbols".

Although tedious, these options are workable; however, they're not really effective en masse.

The Issue

The bigger issue for a client of mine currently is that the 'Replace with Symbol' method is only effective for 2D symbols (including hybrid), not 3D-only symbols.

- When attempting Replace with Symbol in a 3D view, the command is unavailable (fine).
- When replacing a 3D symbol with the same symbol (with the intent of record replacement only), only the 3D symbol's Z rotation is maintained.

Image showing that the Z Rotation is maintained (like a 2D Symbol) but not X/Y Rotation

It could be argued that the worksheet methodology could perform this operation, and this is true; however, taking a broader step to record addition, a worksheet is unable to ADD records to a symbol, whereas the 'Replace with Symbol' command can.

Additionally, 'Replace with Symbol' is typically performed in conjunction with a group selection (either manually, by Select Similar, or by Custom Selection); however, this is sometimes not practical, especially if the desired symbol is within another symbol.

Supporting Issues

I've seen quite a few discussions on the topic of record management from the base symbol, and think it requires an additional menu command to be considered.

Proposed Solution

Presuming that I'm not missing another working solution (noting that ODBC is possible but tedious, from experience), I would propose the following two options, and welcome feedback on them:

1. Creation of a menu command which opens a modal dialog listing all symbols in the document. An example / proposed dialog is below.
2. A right-click context menu from the Resource Browser allowing for easy single-symbol (and subsequent record) updates to instances throughout the document.

However, I'm just one user's perspective among many. If you've got a different method that I've missed amongst the Data Manager or Record commands, I'd love to hear it. Then I guess I'll move this topic to the Wishlist...

Cheers,
J
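To make the proposal concrete, here is a minimal Python model of what a "re-issue records from the symbol definition" command might do, deliberately independent of the Vectorworks API. It walks every instance of the chosen definition in the document (so no selection is needed, which sidesteps the Select Similar problem), copies the definition's record values onto each one, optionally attaches records an instance is missing, and never touches the instance's rotation, which is exactly what 'Replace with Symbol' fails to preserve for 3D-only symbols. `SymbolInstance`, `reissue_records` and the record/field names are all hypothetical; a real implementation would use the Vectorworks scripting API instead.

```python
from dataclasses import dataclass, field


@dataclass
class SymbolInstance:
    """Hypothetical model of a placed symbol: its definition, 3D rotation and attached records."""
    symbol: str
    rotation_xyz: tuple[float, float, float]
    records: dict[str, dict[str, str]] = field(default_factory=dict)


def reissue_records(definition: str,
                    master_records: dict[str, dict[str, str]],
                    instances: list[SymbolInstance],
                    add_missing: bool = True) -> int:
    """Copy the definition's record values onto every instance of `definition`.

    Only record data changes; rotation_xyz (X, Y and Z) is never touched.
    Returns the number of instances of `definition` processed.
    """
    count = 0
    for inst in instances:
        if inst.symbol != definition:
            continue
        for rec_name, values in master_records.items():
            if rec_name not in inst.records:
                if not add_missing:
                    continue                 # leave instances without this record alone
                inst.records[rec_name] = {}  # attach the missing record
            inst.records[rec_name].update(values)
        count += 1
    return count
```

Keeping geometry and data updates separate is the key design choice here: the command only rewrites record values, so X/Y/Z rotations survive regardless of whether the symbol is 2D, hybrid or 3D-only.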

-

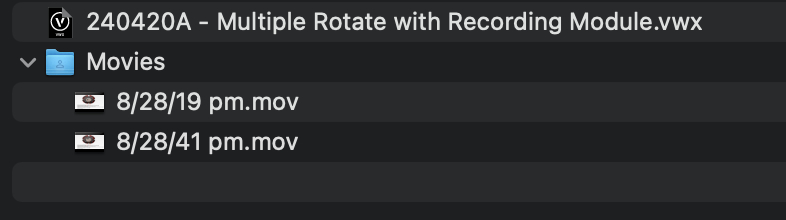

@DCLD, are you a Windows user by chance? I've just checked my Windows system and of course the folder separator is 'Movies/' on Mac and 'Movies\' on Windows; I should have known. I've just uploaded a new version which saves the output files directly wherever the current file is saved: 240421 - Multiple Rotate with Recording Module.vwx

Or, if you want to, you could change the line:

- On Mac: `T := Concat(T,'Movies/',Date(2,2),'.mov');`
- On Windows: `T := Concat(T,'Movies\',Date(2,2),'.mov');`

I'll have a dig tonight and find a check for the platform of choice, as it would be nice to keep the folder structure (and also be able to make the folder depending on whether the Movies folder is present or not... but that's for later!). Happy rendering, and let me know if that solves it on your system! Cheers, J
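For comparison, the same "Movies folder next to the current file" logic sketched in Python, which Vectorworks can also run as a script language. `pathlib` picks the right separator for macOS or Windows, and the folder is only created if it is missing, which covers the make-folder idea. This is an illustration rather than a drop-in replacement for the Vectorscript module: `movie_output_path` is a hypothetical helper, the document folder is passed in as a plain path, and the timestamp file name is an assumption standing in for `Date(2,2)`.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional


def movie_output_path(document_folder: str, stamp: Optional[datetime] = None) -> Path:
    """Return <document_folder>/Movies/<timestamp>.mov, creating Movies/ only if missing."""
    stamp = stamp or datetime.now()
    movies = Path(document_folder) / "Movies"   # pathlib uses '/' on macOS and '\' on Windows
    movies.mkdir(parents=True, exist_ok=True)   # no error if the folder already exists
    # Colons are not allowed in Windows file names, so the time uses hyphens instead.
    return movies / f"{stamp:%Y-%m-%d %H-%M-%S}.mov"
```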

-


@DCLD, you have summoned me; well played. A few months ago I had the time to sit down and finally crack QuickTime recording using Vectorscript, as most probably used in Animation Works, Solar Animation and Animation Pathing. Although I'm now using it for several more advanced animation and pathing tools in Vectorworks which are not yet released, I thought this particular thread deserved an update.

@michaelk, I've added to your (not at all) badly coded rehash, and over-skinned it with my current/draft QuickTime code. Please find the attached file, and keep the following in mind:

Combined Concept of Michael Klaers and James Russell: 240420A - Multiple Rotate with Recording Module.vwx

1. Ensure this file is saved somewhere. Wherever you save it, also make a 'Movies' folder.
2. Ensure the script palette is open: Window > Script Palettes > Adventures in Spinning.
3. Double-click "Multi Spin w/ Record" to start.
4. Videos will be output to the Movies folder in the same location as the file.
5. Movie settings are hard-coded (25FPS #WelcometoPALAustralia); however, they can be unlocked by changing `QTSetMovieOptions(movieRef, 25, 10, false, false);` to `QTSetMovieOptions(movieRef, 25, 10, true, false);`
6. Enjoy this concept file.

Example renders below:

- Core Example OpenGL Half Speed.mov: Core Example Concept File
- Core Example.mov: Core Example (Isometric Clear)
- Example Hidden Line Full Speed.mov: Hidden Line User Example (Thanks @Peter Neufeld. for a cool file!)
- Example OpenGL Full Speed.mov: OpenGL User Example (Full Speed)
- Example OpenGL 10th Speed.mov: OpenGL User Example (10th Speed - Better Lighting)

As much of this thread alludes to, there are many better ways to do this in practice, my most common one being Twinmotion and translators these days. But in terms of seeing something cool through to an endpoint, this is it. Enjoy! J
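The link between spin speed and clip length is simple arithmetic at the hard-coded 25 FPS. A minimal Python sketch, assuming one frame is captured after each rotation increment; the degrees-per-frame values and speed labels are hypothetical, not settings taken from the concept file.

```python
def spin_frames(degrees_per_frame: float, total_degrees: float = 360.0) -> int:
    """Frames needed to cover total_degrees at a fixed rotation per frame."""
    return round(total_degrees / degrees_per_frame)


def clip_seconds(frames: int, fps: float = 25.0) -> float:
    """Playback length of the recorded frames (25 FPS = PAL, as in the post)."""
    return frames / fps


if __name__ == "__main__":
    # Hypothetical rotation steps: halving the step doubles frames, render time and clip length.
    for label, step in [("full speed", 2.0), ("half speed", 1.0), ("10th speed", 0.2)]:
        n = spin_frames(step)
        print(f"{label}: {n} frames -> {clip_seconds(n):.1f} s")
```

Because every frame is a separate render, the slower versions cost proportionally more render time as well as producing longer clips.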

-

AI BIM?

James Russell replied to John S. Hansen's question in Wishlist - Feature and Content Requests

Negative. I actually wrote it after visiting the Henry Ford museum in Nov '23, which is probably why it was still fresh in my mind as a great case example of workplace and sector innovation, with similar role-replacement implications due to technological and mechanical advancement. It could be considered factually incorrect in its wording, as technically the Ford Motor Company invented the moving assembly line, which was my intended reference here, if that's what you're implying...

Edit: Somewhat ironically, this post is also a great example of a Reverse Turing Test, where a human answer is believed to be AI; but again, that's more of a philosophical discussion, perhaps better suited elsewhere!

-


Sorry in advance for a deeper discussion than originally proposed, but I think this is a really interesting and bold topic, and moreover one that so many fields are encountering at the moment.

ChatGPT and other language-based models are redefining the roles of several fields: Translators and Interpreters, Customer Support Representatives, Data Entry Clerks, Content Writers, Legal Assistants, Medical Transcriptionists, Journalists, Administrative Assistants, Researchers, Tutors and Educators.

Stable Diffusion, DALL-E, Midjourney, Adobe Firefly and other image-based models are also redefining the roles of several fields: Graphic Designers, Photographers, Fashion Designers, Architects, Interior Designers, Storyboard Artists, Product Photographers, Cartoonists and Animators, Medical Illustrators, Marketing and Advertising Designers.

...and what's even worse is I just used ChatGPT 4.0 to make those lists, ranked by statistical data as the top 10 anticipated replacements by AI type...

I think we're going through a very broad, unknowingly drawn-out Industrial Revolution (a Cognitive Computing Revolution, if you will); however, I think it's also a matter of perspective. Henry Ford's invention of the assembly line significantly affected the manufacturing sector; or jump back to the 15th century and look at the Johannes Gutenberg printing press and the impact it had on literature, education and culture. There's no putting the 'Genie back in the bottle' for any of this. The question, now that AI has passed the Turing Test, is: when AI can actually design something indistinguishable from, or better than, a Human / Designer / Architect / Biologist / Mathematician, what are we actually meant to do?

Now coming back to BIM instead of a deep philosophy session... I personally believe that AI is unlikely to take over roles in the BIM drafting sector specifically in the next five years. I think that the nuance and attention to detail required for BIM drafting, and also for the roles Vectorworks is specifically targeted at, mean that it won't be a currently targeted sector, and/or that the cost of training AI specifically for that sector, plus the error corrections required in its infancy, would drastically outweigh the benefit.

I do think AI will be used as a presentation tool. I do think AI will be used for inspiration. I do think AI will be used for filling gaps in our tedious work (creating textures, creating rough surrounding buildings, extrapolating topographical data, material data sheets, fact checking, material analysis). Finally, I believe that several governing bodies will establish a rebuttal before this affects job integrity in the BIM drafting field (and extended higher design fields), setting a universal precedent, similar to the actions taken during the 2023 Writers Guild of America strike.

... who knows in 6 years though... 🤪

-


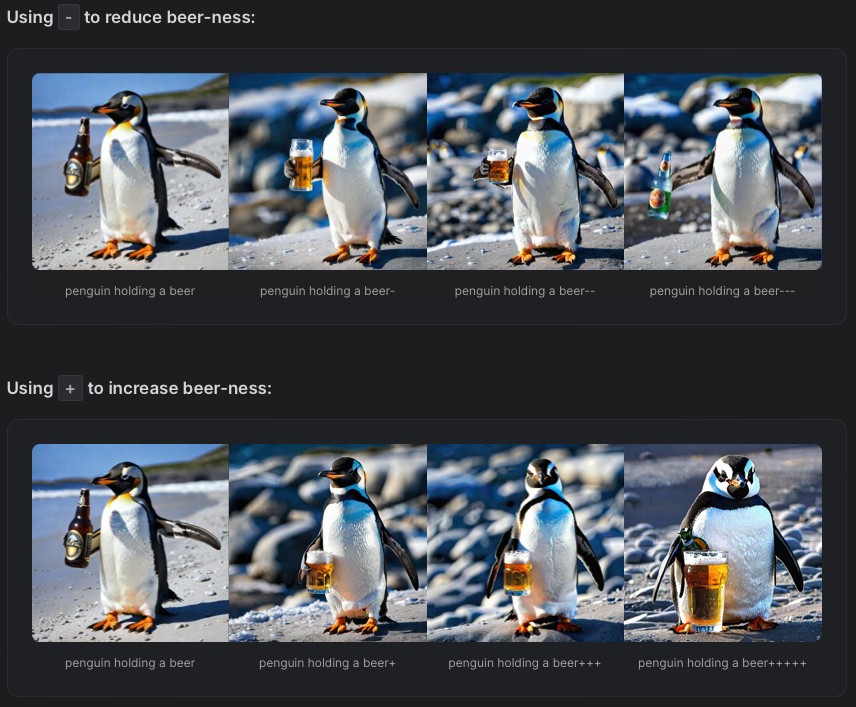

@Dave Donley certainly! When using SD (or other AI image generators: Midjourney, DALL-E, etc.) for extended periods of time, you'll realise that although the sliders are good, it's actually the prompts (both positive and negative) that are better at 'steering' the direction of an image. In my mind, 'Prompt Assistance and Understanding' might have been a better way of writing my dot point, and it comes in two parts.

Parameter Weighting Understanding

For those of you newer to the AI world, the prompt essentially steers your image generation (combined with the seed number for variance) over several iterations through the magical web of possibility until you reach your destination image. Weighting is the concept of how much impact particular word(s) have within the phrase.

I would suggest reading this nice snippet / overview; however, @Dave Donley, you and the team would have to confirm that all of this syntax is accepted by VW AI Viz: https://getimg.ai/guides/guide-to-stable-diffusion-prompt-weights

Within the provided VW documentation (https://app-help.vectorworks.net/2024/eng/VW2024_Guide/Rendering2/Generating_AI_images.htm?rhmapfs=true), it would seem the GUI treats () and [] as an increase and decrease in prompting respectively; for those of you used to SD (in particular Automatic1111), this is the common syntax.

Guide Snippet (to be confirmed with VW):

- single words without parentheses: `penguin holding a beer+`
- single or multiple words with parentheses: `penguin holding a (beer)+`, `penguin holding a (beer)-`, `penguin (holding a beer)+`, `penguin (holding a beer)-`
- more symbols result in more effect: `penguin (holding a beer)+++`
- nesting: `penguin (holding a beer+)++` (beer effectively gets +++)
- all of the above with numbers: `penguin holding (a beer)1.1`, `penguin (holding (a beer)1.3)1.1`

Weighting with numbers:

- `+` is equivalent to 1.1; `++` is equivalent to 1.1^2; `+++` is equivalent to 1.1^3, etc.
- `-` is equivalent to 0.9; `--` is equivalent to 0.9^2; `---` is equivalent to 0.9^3, etc.

As you can see, with the same seed value, the weighting of particular word(s) (in this case, beer) can change things. In a practical example, which I'll need to test within VW AI Viz, this could be the difference between:

`rock band on stage, bright red shiny drum kit, male lead singer with long black hair and black vest, blue lighting, brick back wall`

AND

`(rock band) on stage, (bright red) (shiny)++ drum kit, (male lead singer) with ((long) black hair) and (black vest), blue lighting, [brick back wall]`

The best implementation I've seen of this was a plugin (I'll go digging later) which simply coloured more positive words / phrases in deeper shades of blue, and more negative ones in red, which visually helped people understand the weighting so much more!

Prompt Assistance (including Styles)

Over time users will find series of words which help 'steer' an AI image generator toward their result, which could develop into a style. Without wanting to re-train and/or checkpoint (really time-consuming ways of making an AI do the particular thing you want), it's often better to find your descriptive style and then vary from there. When looking for inspiration, try sites like:

- https://lexica.art
- https://prompthero.com/

These sites allow you to search for something basic, for example 'stage', and then visually find something which matches your requirements, allowing you to see the word associations with those images.
So, for example, searching for 'stage', I might find an image that I like the look of, and from there take a portion of, or the whole, prompt:

`image of a retro stage, full view of the stage, red curtains on the sides, with a slight shadow,stage lights, a soft image, a winding path of strings and notes, men dancing in the middle of the stage, women dancing in dress style,dancing on stage, orchestra backstage, ((best quality) ), ((masterpiece) ), ((realistic) ),(detailed) , creative, poster style, highly detailed realism, 4k`

Over time I might find myself constantly using "((best quality)), ((masterpiece)), ((realistic)), (detailed), creative, poster style, highly detailed realism, 4k", and if I'm using this phrase all the time, I might like to consider it a 'Style' and save it as such: 'Custom Style - Realistic', very much like our Renderworks Styles and Sketch Styles. So instead of writing these words every time, I can just add my 'Custom Style - Realistic' and these words are added to my prompt.

There have been some amazing GUIs over the last few years which prompt users who start looking for additional words; for example, if a user typed the word 'Realistic', the prompter would then suggest: masterpiece, best quality, detailed, high realism, 4k, etc.

I think implementing Styles or Prompt Suggestions could be something to look at for the future, in particular as this technology is introduced to newer users; essentially an automatic version of the overview provided in the "Tips for writing a good prompt" section of your existing documentation.

Happy generating everyone! Cheers, James
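To show how the Automatic1111-style brackets compound, here is a minimal Python sketch that splits a prompt into (text, weight) pieces: each enclosing `()` multiplies the weight by 1.1 and each `[]` divides it by 1.1 (so in Automatic1111 `[]` is about 0.909, slightly different from the 0.9 of the `-` syntax in the guide snippet). It deliberately ignores explicit `(text:1.3)` weights, escaped brackets and the `+`/`-` syntax, and whether VW AI Viz parses prompts the same way is an assumption still to be confirmed with the team. A hypothetical `apply_style` helper and `STYLES` table show the 'Custom Style' idea: a saved phrase appended to whatever you type.

```python
ATTENTION_UP = 1.1          # each enclosing ( ) multiplies the weight by 1.1
ATTENTION_DOWN = 1 / 1.1    # each enclosing [ ] divides it by 1.1

# Hypothetical saved styles, like Renderworks Styles but for prompt text.
STYLES = {
    "Custom Style - Realistic": "((best quality)), ((masterpiece)), ((realistic)), (detailed), "
                                "creative, poster style, highly detailed realism, 4k",
}


def parse_weights(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (text, weight) chunks using nested () and [] only."""
    stack: list[float] = []             # multipliers of the brackets currently open
    chunks: list[tuple[str, float]] = []
    buf: list[str] = []

    def flush() -> None:
        text = "".join(buf).strip(" ,")
        if text:
            weight = 1.0
            for m in stack:
                weight *= m
            chunks.append((text, round(weight, 4)))
        buf.clear()

    for ch in prompt:
        if ch in "([":
            flush()
            stack.append(ATTENTION_UP if ch == "(" else ATTENTION_DOWN)
        elif ch in ")]":
            flush()
            if stack:                   # ignore unmatched closing brackets
                stack.pop()
        else:
            buf.append(ch)
    flush()
    return chunks


def apply_style(prompt: str, style_name: str) -> str:
    """Append a saved style phrase to the user's prompt."""
    return f"{prompt}, {STYLES[style_name]}"


if __name__ == "__main__":
    for text, weight in parse_weights("(rock band) on stage, ((long) black hair), [brick back wall]"):
        print(f"{weight:>6}  {text}")
    print(apply_style("image of a retro stage", "Custom Style - Realistic"))
```

Printing the weights next to each phrase is a plain-text version of the blue/red colouring idea: nested brackets such as `((long) black hair)` make the compounding (1.1 × 1.1 = 1.21) visible at a glance.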

-

Hi All,

Firstly, congratulations to the Vectorworks development team: this is a bold step and a necessary implementation to remain competitive within the market, and it simplifies what some of us were turning to other applications (SD standalone, Photoshop, etc.) for in the interim.

Initial implementation, in my opinion, is great. I updated to VW2024.4, updated VSC, pulled 'AI Visualizer' into the workspace and was generating images in no time.

Coming from a background of using Stable Diffusion 1.4 (2022) through to XL 1.0, I'd like to put the following items up for discussion and implementation, primarily to bring the usability of this feature in line with long-term SD user expectations.

Saving and File Names

Organisation of files is one of the baseline features of SD iterations. As such, the following could be considered:

- Option to automatically save all iterations to a folder.
- Option to automatically create a session folder.
- Option to auto-name files with: incremental number; datestamp / timestamp; shorthand description (initial 32 / 64 characters of the description field); seed number.
- Option to save upscaled versions to a separate folder.
- Option for a text file to be included with the associated incremental number (ID). Within the text file, include full details of the generation: positive descriptions, negative descriptions, seed number, image resolution, number of steps. This is super important when leaving a project for a time, then returning and trying to remember prompts for a certain image / result / seed.

Multiple Iterations (Batch)

Combined with MultiSave (above), multiple iterations is one of the biggest creative boosts I've personally found over the few years of SD use: being able to generate 8 / 16 / 64 versions of something and choose the best one.

- Multiple Iterations: allows multiple images to be generated (1 - 64); once a desired seed is found, tweaks to prompts can be made.
- Batch Time Saving: I'd be quite keen to finalise a viewport / image in VW and then set a batch of 16 iterations going to give me options.
- Grid Return: SD natively supports a grid summary of results with individual outputs to follow; suggest implementing.

Iteration Slider Controls

Although the Creativity slider is good, and is a simplification of the multitude of variance in SD, it would be good to see some of the following in an 'Advanced' dropdown:

- Seed Number: should be shown for resuming previous sessions (presumably this is what Generate Similar actually refers to).
- For new users, the Creativity slider should indicate the 'More Creativity' direction (hover text; see the CFG slider native to SD for reference).
- Number of Steps: combined with iterations, this becomes an important element in 'steering' your desired results. I'll often produce 64 results at 25 steps, followed by the best 24 of those at 40 steps, and then the best 12 of those at 60 steps.

In Fill / In Painting

Very sure this must be in the pipeline; however, it's worth mentioning.

- Marquee Selection Infill: a logical step to be able to select a particular element and infill. Rectangle, circle and lasso marquees and a mask painting overlay would be preferred.
- Inverse Selection Infill: useful for keeping a central element (for example an outdoor stage) and then changing the surrounding elements (sky / weather / season).
- Bleed / Blur / Feathering: the 'hardness' of the marquee selection and resultant infill / outfill.

Miscellaneous Items

- Face Restoration: could very well be on by default. Would advise allowing pre- and post- images to be saved independently for comparison.
- Tiling: would suggest looking at this checkbox / plugin, mainly for iterative texture creation (one of the biggest things I currently use SD for).
- Auto Prompting: at a GUI level it would eventually be good to see this, in a similar way to Styled Prompts in SD standalone / Photoshop.
- PNG Transparency: often achieved with the Background Remover plugin, this is the best way to create image props (currently how I do them with SD).

I'm sure that some / most / all of the above is in the pipeline, and I completely consider the current release a taste of what's to come; excellent as an introduction for many in the community.

Raw image - Palais Theatre (Melbourne)

Concept Generation - RAW AI VW

Congratulations to the team again on this.

Cheers,
James
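The Saving and File Names requests can be sketched in a few lines. A minimal Python illustration, assuming hypothetical helpers `session_folder`, `shorthand` and `save_iteration`, and a plain-text sidecar layout chosen here for illustration (not a VW or SD file format): each image is saved into a per-session folder with an incremental ID, a timestamp, the first 32 characters of the description and the seed in its name, and a matching `.txt` file records the full generation settings.

```python
import re
from datetime import datetime
from pathlib import Path


def session_folder(root: Path, when: datetime) -> Path:
    """One folder per generation session, e.g. root/session_20240421-1805."""
    folder = root / f"session_{when:%Y%m%d-%H%M}"
    folder.mkdir(parents=True, exist_ok=True)
    return folder


def shorthand(description: str, limit: int = 32) -> str:
    """Filesystem-safe version of the first `limit` characters of the description."""
    return re.sub(r"[^a-z0-9]+", "-", description[:limit].lower()).strip("-")


def save_iteration(folder: Path, image_bytes: bytes, index: int, when: datetime,
                   positive: str, negative: str, seed: int,
                   width: int, height: int, steps: int) -> Path:
    """Write <ID>_<timestamp>_<shorthand>_seed<seed>.png and a matching .txt sidecar."""
    stem = f"{index:04d}_{when:%Y%m%d-%H%M%S}_{shorthand(positive)}_seed{seed}"
    image_path = folder / f"{stem}.png"
    image_path.write_bytes(image_bytes)
    details = [
        f"ID: {index:04d}",
        f"Positive: {positive}",
        f"Negative: {negative}",
        f"Seed: {seed}",
        f"Resolution: {width}x{height}",
        f"Steps: {steps}",
    ]
    (folder / f"{stem}.txt").write_text("\n".join(details) + "\n", encoding="utf-8")
    return image_path
```

Zero-padding the ID keeps files in generation order in any file browser, and putting the seed in the name means a result can be reproduced even if the sidecar file goes missing.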

-

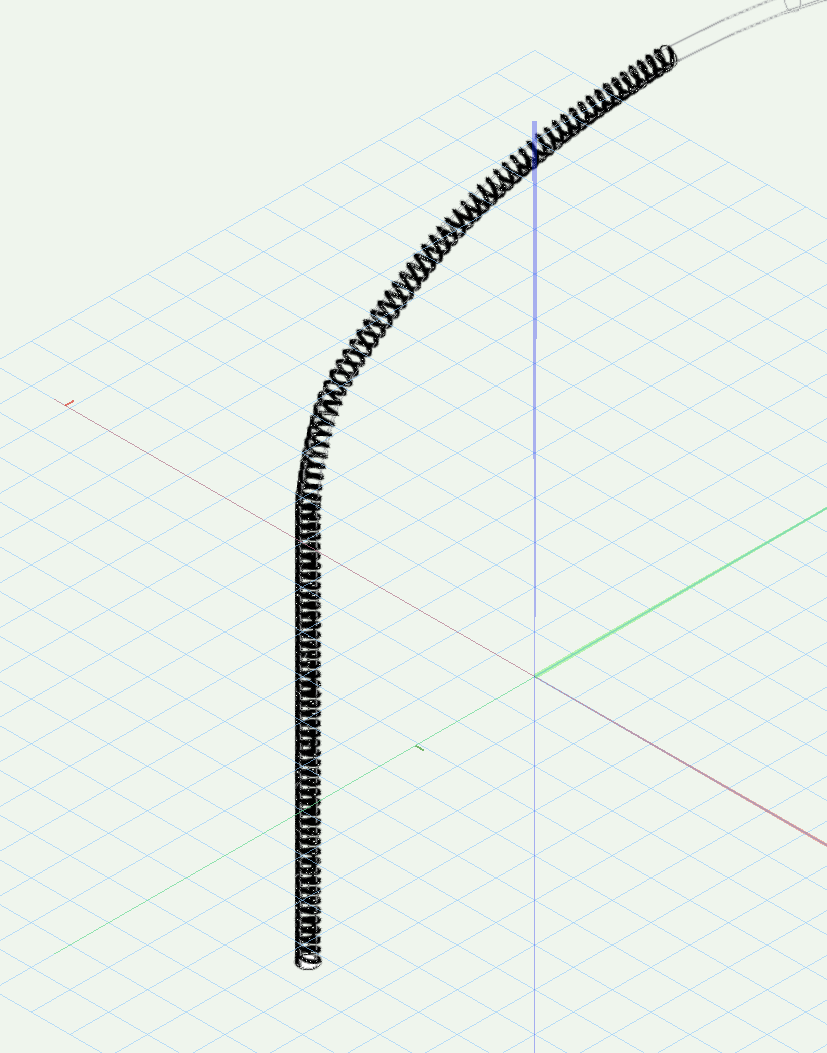

Twisted Extrude Along Path - Challenging Columns

James Russell replied to James Russell's topic in General Discussion

@VIRTUALENVIRONS, I did encounter the same two issues you mentioned:

1. Both the creation dialog and the Object Info palette seemed to max out the number of turns / pitch (both at around the same relative value). Ideally, as shown in the reference image, I would have liked a pitch of around 70º, but could only achieve something closer to 50º with my variables. I ended up having to duplicate my path to create a second helix 180º out of phase, but would still like to know why I couldn't get the pitch/turns much, much tighter.
2. I had great difficulty doing the EAP with a Locked Profile Plane, which in your example would keep the flat of the profile object perpendicular to the cylinder. I opted for an octagon for my extrusion (a compromise between roundness and polygon count), but would still like to know why the square I originally tried failed multiple times and/or took an incredibly long time to generate.

Very nice result in your example image though; well done!

-
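The pitch angle and the number of turns are tied together by the column's height and radius. A minimal Python sketch, assuming the angle is measured from the column's vertical axis (which matches the sense above that tighter turns give a larger angle) and that the path is a regular helix on a cylinder of radius r and height H. Unrolling one turn gives a right triangle with base 2πr and rise H/n, so tan(angle) = 2πrn/H. `helix_angle_deg` and `turns_for_angle` are hypothetical helpers, not Vectorworks functions, and the column dimensions are illustrative.

```python
import math


def helix_angle_deg(height: float, radius: float, turns: float) -> float:
    """Angle between the helix and the column's vertical axis, in degrees."""
    rise_per_turn = height / turns                      # the helix pitch (rise per turn)
    return math.degrees(math.atan2(2 * math.pi * radius, rise_per_turn))


def turns_for_angle(height: float, radius: float, angle_deg: float) -> float:
    """Turns needed on a column of this height and radius to reach angle_deg from vertical."""
    return height * math.tan(math.radians(angle_deg)) / (2 * math.pi * radius)


if __name__ == "__main__":
    # Illustrative column: 3000 mm tall, 150 mm radius (not the post's actual dimensions).
    for angle in (50, 70):
        print(angle, round(turns_for_angle(3000, 150, angle), 1))
```

Whatever the column size, going from 50º to 70º needs tan 70º / tan 50º ≈ 2.3 times as many turns, so a turns limit in the dialog that allows 50º would need to be more than doubled to reach 70º.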


Hi @Tom W., what an excellent suggestion; I'd never considered the Helix Spiral beyond a straight vector before! I'll leave this post open for a little longer in case there are other crafty critters out there with alternatives... in the name of science, that is! Cheers, J
