Posts posted by PVA - Admin

-

On 1/22/2019 at 2:24 PM, David S said:

The single biggest (internal) benchmark is very simple: does this release make my client's workflow easier and quicker, and how?

Unfortunately, that's the exact opposite of a benchmark. It is the subjective experience one has when using software, and most of our users' workflows are not standardized or comparable to one another. It is the MOST important metric in my opinion as well, but it is so personalized that it has to be judged in a much more human way than something like objectively faster rendering speeds and load times.

On 1/22/2019 at 9:00 PM, jnr said:

I would offer that at least for architects, two benchmarks might be to measure say a typical residential project as well as a multi-story commercial building using NNA templates, complete with NNA standard PIO's etc. two projects that might be typical (solicit users? have a competition?) that can then be used to measure versions as time goes on.

This is kind of the problem I'm getting at. If we start dictating what a typical residential or commercial project is, sure, that's possible. The fact that we DON'T dictate what is typical and what is out-of-spec or beyond design intent is the key issue. I can pop out files that *I* consider standard, sure, but a lot of the time when getting feedback it becomes "Well obviously you would use Floors and Never Slabs" or "No one uses tool X, everyone uses Y" followed shortly by "That's preposterous, Y is used by everyone I know and their dog, X is unheard of and used only in Liechtenstein." This is of course an overdramatic comparison, but I am still finishing the first cup of coffee. The key thing I want to convey is: I WANT to provide metrics and benchmarks and version comparisons; the hard part is merely deciding how to do so in a meaningful way.

On 1/23/2019 at 7:18 AM, M5d said:

I'm unsure what the jump-cut from the previous thread's question, to this thread's answer is intended to convey Jim?

Because they are two logically separate topics, one asking for official benchmarks on speed (objective) and one concerned with a perceived decline in speed across versions (subjective). There's no way I could possibly carry on both conversations in a single thread without it getting out of control.

On 1/23/2019 at 7:18 AM, M5d said:

Also, I have to say, the logic of deliberately avoiding benchmarks that include the apparent, problematic, operations and calculations etc., until they're no longer problematic, is truly inspired. Is that a standard operating procedure (S.O.P) at N.A.?

I posted a question asking specifically what kinds of things users wanted to see in benchmarks, and said that I see the value in them. How did you arrive at the conclusion that I/we wanted the opposite of that?

Though to be clear: regardless of how frustrated you may be, the passive-aggressive tone that has so permeated our media and politics will not be accepted here:

On 1/23/2019 at 7:18 AM, M5d said:

To "clarify" Jim, is this statement intended to address the "user experience" that was raised in the previous thread?

I need you to dial it back a bit. In any case, ignoring a problem like that isn't possible for us: you all could easily just post benchmarks refuting any false claims we made, or highlighting any claims we left out.

I'm not trying to NOT show benchmarks, I'm trying to ONLY show benchmarks that are:

1) Factual (And not just in ideal conditions)

2) Beneficial to a majority of users

3) Not lying by omission (Looking at those ever-changing focus metrics Apple uses at their keynotes)

4) Useful in purchasing decisions

It is no secret at ALL that geometry calculation speeds have not changed between versions. This is because of that single-threaded core geometry engine I've discussed here so often. That issue pretty much just translates into a flat line chart, however, not one that is increasing or decreasing. You're pretty much going to get the same time results across versions for things like duplicating an array of cubes or importing DWGs with a set number of polygons. If those kinds of charts are what people really want, sure, I'll post them, but I don't think they help OR hurt. The problem with geometry calc slowness is known, and talking more about it or filing more requests related to it will not change it any faster. It is already being worked on. If it was possible to make that go faster, I'd be doing whatever made that happen instead of writing this post.

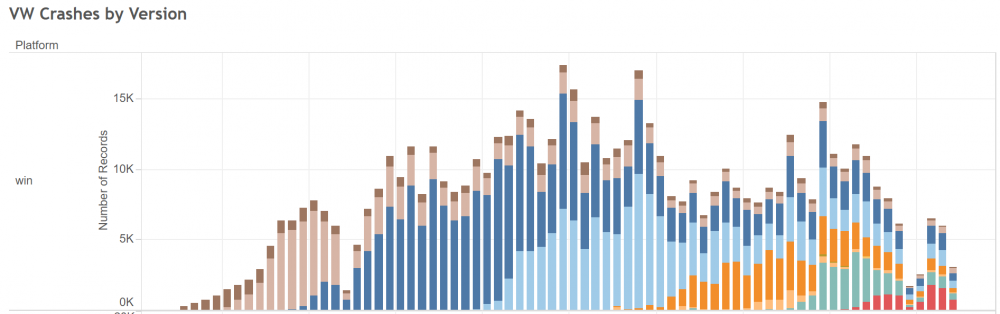

I normally don't share things like this because of their complex nature, but here's an idea of what's going on from an analytical side. Speed is VERY hard to pull anonymous metrics on, but Vectorworks crashes are quite trackable:

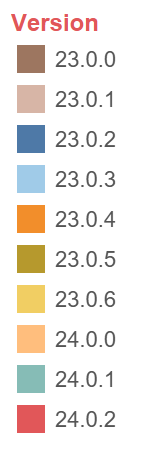

The color key for the above chart:

From left to right, the weekly number of crashes we get from the various versions of Vectorworks. I began this filtered chart at the launch of 2018 SP0. The big dark and light blue bits in the center are Vectorworks 2018 SP2 and Vectorworks 2018 SP3. Versions are stacked from top to bottom on these charts, the top being the oldest version included and the bottom being the latest. By far, the most unstable versions of Vectorworks were the middle of 2018's life cycle. The small green and red bits on the bottom right are 2019 SP1 and SP2. Crashing has reduced SIGNIFICANTLY in that time, yet we still have reports thinking that 2019 is more unstable than past versions.

This is because these simple metrics aren't enough to capture everything there is to the experience of working with a set of tools like ours. It's all too easy to discount claims of slowness or instability with metrics like the one above, but we choose not to hide behind metrics like this because we know they can't possibly tell the whole story, and because we use the software ourselves and see that what you all are saying FEELS true.

We will be sharing more metrics in the future. We will be making more benchmark-style reports available in the future. If you all can provide me with specific objective comparisons you would like to see, I will provide them. That's the point of this thread.

-

I am unable to replicate this here. Get in touch directly with tech@vectorworks.net; they will likely request this from your machine:

-

21 minutes ago, Denise said:

the background has gone green.

You may have accidentally moved to the 3D view. Go to View > Standard Views and select Top/Plan. It might currently be on Top.

-

I need the system profile from that machine please, the above link will get you the steps needed and you should be able to drag/drop it here as an attachment.

-

Once you get the chain and snaps made though, this tool may become useful to you:

http://app-help.vectorworks.net/2019/eng/index.htm#t=VW2019_Guide%2FAnnotation%2FCreating_Repetitive_Unit_Details.htm

-

I mean draw the 3D loci afterwards, separately from the extrusion.

I recommend selecting one link and one 3D locus, then using Right Click > Align/Distribute > Center followed by Right Click > Align/Distribute > Center. That should give you a perfectly centered snapping point. Then duplicate that snap point and move it in 3D via Modify > Move by the desired amount, relative to the overall dimensions of the object and where you want the snap points to be. Repeat that operation, duplicating the center snap locus each time you need to offset a new one.

You should only need to do this to one link, then just select that link and its needed loci and duplicate/rotate that into place, then save the two links and their identical snap points as a repeated symbol.

-

10 minutes ago, line-weight said:

I wonder to what extent this approach is sustainable if we are trying to move towards BIM and generally working much more in 3d. Don't get me wrong, I'm quite keen on the principle of not being constrained to one way of doing things, but compared to doing 2d linework (which is what VW excelled in for some years), constructing a workable 3d model and generating drawings from it is a pretty complicated process.

This very question keeps many of us up at night. It's a big one. We also cater to many different industries that use varying levels of information inclusion in their models (for instance, the Entertainment folk have effectively been doing their version of BIM for quite a while, and certain aspects of Site Design have been data driven since I started here), so the right answer for one is not always the right answer for all of them.

I am very glad for once that the method in which we handle this is above my pay grade; it's an industry-wide big-ticket question in a lot of ways.

-

I think the quickest way would be to add 3D Loci objects at the points you want to be snappable, then with the link geometry and the 3D loci selected, create a symbol, most likely with the symbol's insertion point aligned with one of the snappable points. That should let you insert it at one of the snap points if desired, in addition to keeping file size down and letting you mod all the chain links at once later if need be.

-

Did you register with a school in one country but choose your location as being in another? That may have gotten it stuck, but if you emailed your local support, they should be able to correct it. I do not have admin control over that region.

-

This came up recently in another thread, but I think it merits its own discussion:

20 hours ago, P Retondo said:

Jim, VW needs to deal head on with these speed and efficiency perceptions / reality (?) by instituting performance testing and releasing the data. When I buy a processor I look at all the available data, and it is both voluminous and convincing. CAD programs need to do the same thing - if for no other reason than to let their engineers know whether they are doing a good job. When I make the time-consuming commitment to convert my files and resources to a new version, I want to know if my performance is going to be at least equal to the previous version. That's just a simple business decision, and I don't base those on sales department press releases.

I want to do this. Some of our distributors have directly asked us for something similar. The key difficulty I'm running into is: I have yet to find a way to even come close to showing what a "standard" file is. It can't be defined by number of objects, since objects have a broad range of complexity and various types of objects affect performance in various ways. It can't be defined by file size, since a dramatic difference in file size can occur even between two files that have the same geometry, depending on how cleanly the resources in each file were managed.

For instance, a common issue we run into is a report of something like "Sheet Layers Are Slow", which can have a bunch of different causes. The core one I see most often is a user using only a single sheet layer and then putting dozens or sometimes even hundreds of instances of a Title Block object on it, which will bring things to a crawl. Title Blocks are optimized so that they are only recalculated and loaded when a sheet is viewed, or right before it's printed/exported. If ALL title blocks are on a single sheet layer, this optimization becomes worthless and you have to wait for all sheets to update in order to continue working once you switch to that single sheet layer.
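To make the title block point concrete, here is a toy model in Python. It is only a sketch of the idea described above, not how Vectorworks is actually implemented: the function name, the dictionary layout, and the sheet names are all hypothetical, and the only assumption is that viewing a sheet layer triggers an update of every title block placed on that layer.

```python
def title_blocks_to_recalculate(sheet_layers, viewed_layer):
    """Return how many title blocks must update when `viewed_layer` is opened.

    `sheet_layers` maps a sheet layer name to the number of Title Block
    instances placed on it (a hypothetical, simplified data model).
    """
    return sheet_layers.get(viewed_layer, 0)


# Layout A: 100 sheets, one title block on each sheet layer.
one_per_layer = {f"Sheet-{n}": 1 for n in range(1, 101)}

# Layout B: the same 100 title blocks all placed on a single sheet layer.
all_on_one_layer = {"Sheet-1": 100}

print(title_blocks_to_recalculate(one_per_layer, "Sheet-1"))     # 1
print(title_blocks_to_recalculate(all_on_one_layer, "Sheet-1"))  # 100
```

Under that assumption, the same 100 title blocks cost one update per view in the first layout and all 100 in the second, which is why two files with identical content can feel so different.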

This means that in order to define performance, I would also have to effectively dictate workflow, something we have not done before in most cases. The general rule in Vectorworks has long been "You can do almost everything 5 different ways, the correct one is the one that works best for you." and I really do love that. But making standardized performance indicators for Vectorworks runs exactly in opposition to this mindset in every way I've been able to come up with.

SOME parts of Vectorworks are easy to benchmark, such as rendering speed, which is why you have all likely seen those that I have posted. Those are much more cut and dry, as I can test the exact same scene across different hardware and the resulting rendering time is a direct relation to performance.

Things like duplicating arrays of objects, doing complex geometrical calculations etc, do not result in times that vary directly based on hardware performance, since a lot of the slowness in those operations is currently a Vectorworks software limitation and not the fault of your hardware. Until these processes are moved to multiple threads I don't think they will be benchmark-able in a meaningful way. (To clarify, I TRIED to benchmark them in a meaningful way, and got more variance in the completion time based on what other applications were open than based on what hardware I used.)
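For anyone who wants to try this kind of comparison themselves, the sketch below shows one generic way to check whether a timing is stable enough to be worth publishing: repeat the operation several times and look at the spread, not just a single number. It uses only the Python standard library. `duplicate_array_stand_in` is a made-up placeholder for a single-threaded operation and does not call any Vectorworks API.

```python
import statistics
import time


def time_operation(operation, repeats=5):
    """Run `operation` `repeats` times and return the wall-clock durations in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Return the median and the relative spread (stdev / mean) of the timings."""
    mean = statistics.mean(durations)
    if len(durations) > 1 and mean > 0:
        spread = statistics.stdev(durations) / mean
    else:
        spread = 0.0
    return {"median": statistics.median(durations), "relative_spread": spread}


def duplicate_array_stand_in(count=20000):
    """Hypothetical placeholder for a single-threaded geometry operation."""
    return [(x * 1.0, x * 2.0, x * 3.0) for x in range(count)]


if __name__ == "__main__":
    print(summarize(time_operation(duplicate_array_stand_in)))
```

If the relative spread changes more when other applications are opened or closed than when the hardware changes, the number is measuring the environment more than the machine, which matches what I ran into.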

I would very much like to hear any suggestions or feedback if anyone can see an avenue to approach this that I have missed. I miss plenty. I will not be working on this for some time, but I wanted to go ahead and pop this discussion up and take responses while it was fresh in my mind. I would also like to hear the KINDS of performance indicators you all are interested in so that I can ponder on how best to provide them in a technically simple but accurate manner.

-

Do you mean 3D models of pinball/arcade cabinets that you would use IN a rendering?

I would suggest taking a look at 3Dwarehouse:

https://3dwarehouse.sketchup.com/search/?q=arcade cabinet&searchTab=model

-

What version and service pack of Vectorworks? Reply back with the following from your machine as well and I can take a look:

-

1 hour ago, elepp said:

A pity it got removed, but will do for now. Thanks a lot for the quick replys.

It had to be removed from the Styles, otherwise if you wanted one slab transparent and another drawn normally, you'd have to create a whole new style just for that purpose.

-

37 minutes ago, MelanieR said:

As stated in my post, sheet layer performance issues occur on a variety of files, machines and versions. With every new VWX version this negative performance trend is more noticeable. Version 2018 and 2019 were just examples I mentioned. Why have you closed this topic for open discussion? Why do I even ask this?

Please reply back with the system profile I requested as well as the requested screenshot.

37 minutes ago, MelanieR said:

Why have you closed this topic for open discussion? Why do I even ask this?

I did not. What made you think this? If there's a UI element on the forum that confused you, please let me know and I can try to clean it up.

-

Did this slowness only start after installing it on the newer machine, or was it happening before and the newer machine was an attempt to speed things up? Were the files fine in 2017 and only slow in 2018/2019? Could you please attach a screenshot of an entire normal sheet layer for one of your files?

Reply back with the following as well, please:

-

Hrmm, that isn't screen/layer redraw then. Make sure to have tech@vectorworks.net take a look in that case; there might be some stuck geometry slowing you down unduly. 1800 isn't THAT many objects and shouldn't start crawling unless they each have hundreds or thousands of vertices or something like that.

-

53 minutes ago, nahekul said:

We have noticed that Vectorworks slows down/freezes every time something new is drawn (eg. lines, squares, dimensions) on layers with a lot of objects (~1800 objects).

Moving and duplicating objects are okay, just not drawing anything new.

It happens with VW2019 SP2 only. Other versions seem to be fine.

We think this is because Vectorworks is trying to redraw the entire design layer.

If all the classes on the layer are hidden, then there are no issues with drawing anything.

Drawing objects inside a group without showing other objects outside the group is fine as well.

If there is a way to limit the redraw to the display area instead of the entire layer, then I think it should solve this issue.

We can narrow down where the issue is with a symptom like that: Do you get the same slowdowns with Tools > Options > Vectorworks Preferences > Display > Navigation Graphics set to both the top and bottom settings in a quick test? Or does one seem much slower than the other?

-

5 hours ago, ConcourseDM said:

Over to you now Vectorworks Inc. Please help as this is really slowing down our workflow.

Was this issue already submitted to tech@vectorworks.net directly, or to your distributor? The forum is mainly for user interaction; it is not a formal support channel.

-

On 1/19/2019 at 11:05 AM, cberg said:

Who knew the split tool also works in 3d. Vectorworks is so strange.

I think of it as my Industrial Cutting Laser.

-

NOTE: None of this is intended as any kind of excuse or reason for delay, I simply wanted to give insight into what's going on and how things look internally:

19 hours ago, twk said:

on the other hand, surley Teamviewer sessions with Vectorworks Tech support would be much faster/cheaper/easier then bug submissions/phone calls/site visits?

I should probably wishlist this..

Tech now uses TeamViewer daily for exactly this reason; it works excellently.

21 hours ago, Michael H. said:

New versions of VW can be frustrating, I feel your pain. I now follow these self-guided rules which seems to work well for me.

- If I start a project in a specific VW version, finish it in that version.

- Don't migrate to a new version of VW until at least SP2 or SP3. Just because a new version has been released doesn't mean I have to jump on the bandwagon immediately.

- Spend some time to play around with any new version to learn features, see what works or doesn't before taking the leap. I tend to move to a new version about 6 months after the initial release.

- Every now and then I re-do my templates from scratch.

I'm now on VW2019 and it's been plain sailing so far. Hope your issues get resolved.

This is an exceedingly good list of practices. My goal is to make many of them a distant memory however 😉

1 hour ago, line-weight said:

Maybe this is the only way to get a message to the people higher up at VW?

The message is there, it's just going to take time for the change to get all the way through the pipe. I mean you can certainly do this if you feel it's merited, I don't want anyone paying us for something that doesn't do what they need, but from what I can see that would not make it happen faster.

20 hours ago, bcd said:

Sounds like a site visit from a VW tech-rep might in order. It could be that visiting a practice where these issues arise would be the best use of everyone's time and resources and the lessons learned could be disseminated to the users for immediate implementation and internally @ VW for QA.

We agree, this is something we are doing more and more often, however it wouldn't be the techs that would go normally. Tech support normally handles issues like installation and driver compatibility and configuration and "basic" usage questions, but they don't have the resources to expand into even moderately complex workflows (Project sharing outside of standard advised practices, interoperability with other packages, working with references that rely on other origin systems, etc) so a lot of that falls on what we have here called Product Specialists.

However, these two teams are in completely different departments because they developed independently. We identified this as a problem a ways back and have been working since then to get the two disparate groups more meshed together to develop complex workflows (I use the term "complex" to indicate merely more than explaining wall component heights, for instance; it's relative) and create more standardized training content and whitepapers on how we at least EXPECT users to work with the various combinations of tools.

I cannot open my mouth about the specific things I want to open my mouth about at the moment, I'm in muzzle mode for a pretty damn cool project coming up, but I wanted to deliver as much of an answer as possible at the present time.

-

Deform tool would work, Split tool may also be what you're looking for from an isometric view:

-

Thank you, that verifies that the issue is likely not one of the VGM-related problems we are currently tracking. Just helps me in my filing.

-

11 minutes ago, Andy Broomell said:

So this problem is so bad in my 2019 file that I literally cannot do my job. 😣

That's the one I saw before too!

If you could please: The very next time that issue happens, leave that editing mode open and then go to Tools > Options > Vectorworks Preferences > Display and change the Navigation Graphics setting to the opposite of what it currently is, either Best Performance or Best Compatibility (not the middle one, I mean) and tell me if the geometry snaps back into place or if it shows the same weird broken offset. Thank you.

-

15 minutes ago, ericjhberg said:

I reiterate my above concern about origins...if you're asking us to change the way we do things, then please offer a best practice that accounts for all of the intricacies of the coordination issues we face. Don't just tell me that the origin is the problem, fix it.

That explains the support issue then. I was worried they just hadn't replied or something, so that's sorted. Tech support reps are mechanics: they can often fix problems, but they don't have the resources to be able to teach users "how to drive".

In your case, it isn't just simply "This is being done wrong" because you're in a situation that's becoming more and more common: complex interaction between multiple shops with more data-sensitive files than they had before. This kind of thing is definitely what our product specialists are here for and I'm going to try and loop them in to see if they can get (or already have) best practices for this established.

Now, that's of course not ALL you're running into, you're hitting graphical limitations as well because of file complexity, which at the moment has no cure other than "The file must be less complex." which is no good. There is a significant development in the pipeline currently that will do worlds for this, but until I know more details about WHEN we will see it, I'll keep pushing the regular avenues to get a workaround.

-

VW2018 SP5: Sheet layer redraws are STILL a mess

in Troubleshooting

Posted

It is incredibly unlikely that 2018 will receive another patch ever again.