Daniel B. Chapman

Member, 68 posts

Everything posted by Daniel B. Chapman

-

Is there a way to name the respective parts of the MVR output based on the symbol name--or any other method--when a symbol is exported? I'm exporting a set of geometry that is all named "Scenic Piece A" etc... and structured as a group of symbols but when it comes into Vision it is just a group of Geometry. I realize this is probably a bit of an edge case request, but it would make tracking things in the tree a lot easier. I'd love a workflow to quickly update and organize the meshes other than breaking it into a bunch of separate exports. I'm assigning an emissive mesh to all of these elements that are patched so tracking it would be incredibly helpful.
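A minimal sketch for checking which names actually make it into an export, assuming the published MVR layout (a ZIP archive with a `GeneralSceneDescription.xml` at its root). The helper name `list_named_objects` is made up for illustration. It deliberately walks every element that carries a `name` attribute instead of relying on specific element types, so it makes no claim about how Vectorworks or Vision treat symbols internally.

```python
import io
import zipfile
import xml.etree.ElementTree as ET


def list_named_objects(mvr_file):
    """Return (tag, name) pairs for every element with a non-empty name attribute.

    mvr_file may be a path or a file-like object. The scene description
    file name is taken from the MVR layout assumed above.
    """
    with zipfile.ZipFile(mvr_file) as archive:
        with archive.open("GeneralSceneDescription.xml") as scene:
            root = ET.parse(scene).getroot()
    # Document order is preserved, so parents appear before their children.
    return [(el.tag, el.get("name")) for el in root.iter() if el.get("name")]
```

Running this against an export and looking for "Scenic Piece A" would at least show whether the symbol names are dropped at export time or only hidden in the Vision tree.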

-

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

Thanks! I was able to get this working. I'll submit some more questions once I have this further along.

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

Ok, can someone please explain to me what I'm doing wrong here? I created a basic 3D polygon and assigned it to Universe 1.1 with the name of the NDI source (the source is actually SyphonNDI). I reset my Vision Video sources and...nothing. The back of the texture is also blank. Quick Edit: all my other NDI tools on this workstation are fine. I'm actually previzing this project in Capture right now...

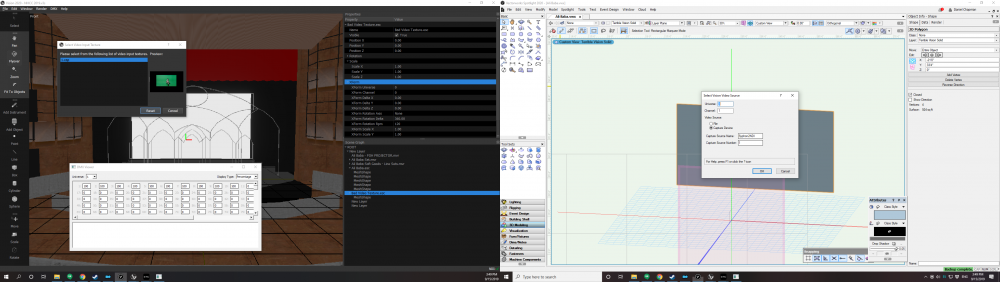

Vision Functional Projector

Daniel B. Chapman posted a question in Wishlist - Feature and Content Requests

Vision should support attaching a video source to a projector and projecting that Video Source. This seems obvious, but the lack of it is disqualifying Vision as a previsualization tool for 80% of my productions.

Ouch... this should be a priority request. I can't simulate any projection maps with simple geometry for live theater/opera. Part of the previsualization workflow is to examine the shadows cast in various venues to explain the problems to the rest of the design team so that smart fiscal decisions can be made. Is there an ETA on this? I'm effectively sidelined on Vision for what is looking like another year. And not to harp on this, but this has been an issue since 2016. Video and lighting cannot easily be separated in client renderings, and not all video applies to a surface. I'm often splitting geometry, using the projector as a light, mapping it to moving scenery, all sorts of use cases. I can't stress enough how important this is as a feature. Video walls are cool and all, but they are easy to fake a render on, even in Blender. I was really hoping to use Vision for this season.

-

I can't for the life of me figure out how to assign a video source to a simple projector. I don't have a screen object (nor do I want one in this model). I'd like to just take a "generic" 20K with a 1.3-1.9 zoom and pipe some video into it. When I export this I don't see an option to select a source, and Spotlight -> Select Vision Video Source doesn't work for this. (I've spent the last hour searching the manual.) Am I missing something here? How do I get video to come out of a projector?

-

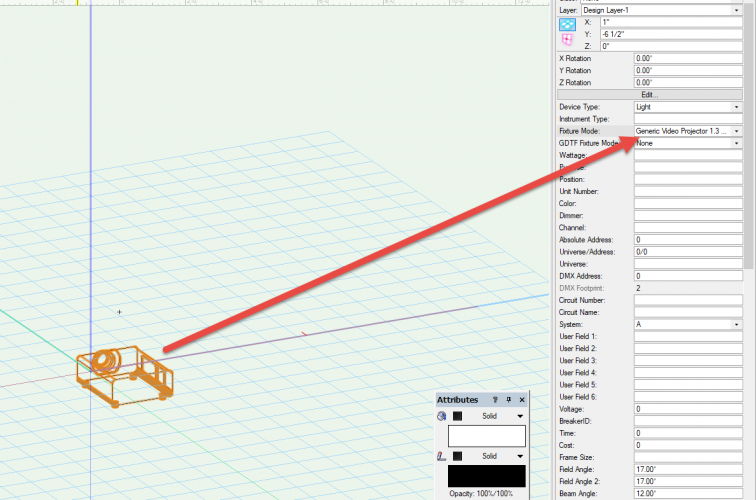

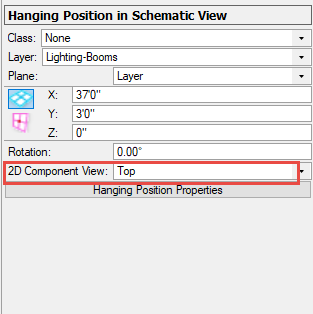

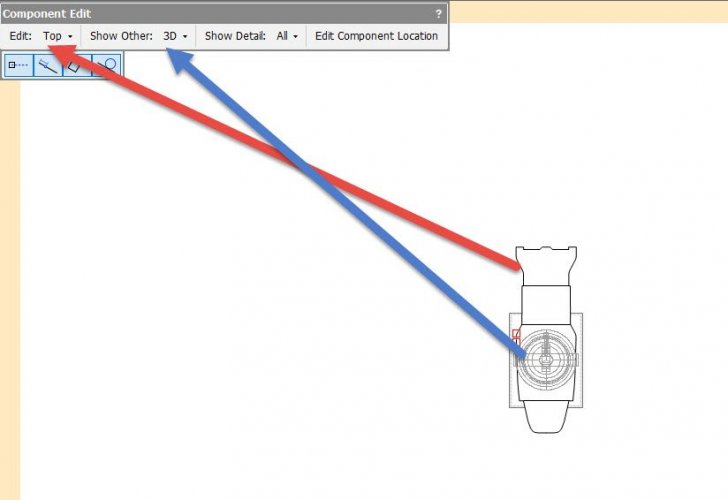

Schematic View 3D Rotating (Booms)

Daniel B. Chapman replied to Daniel B. Chapman's topic in Entertainment

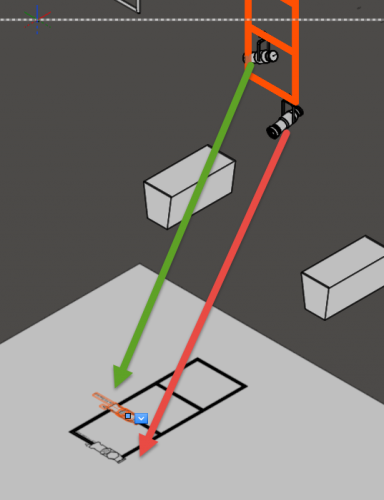

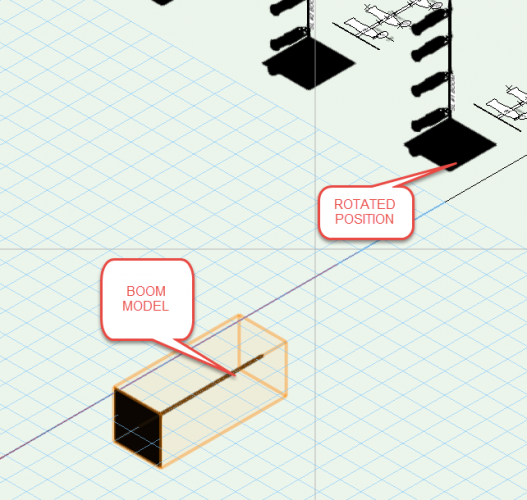

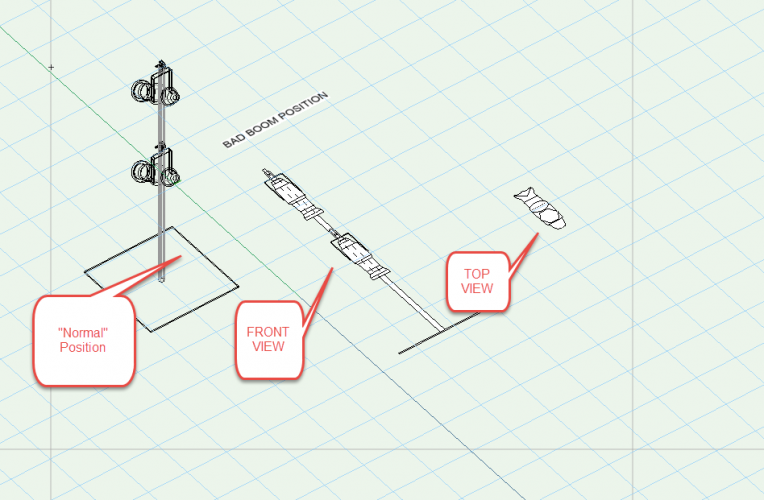

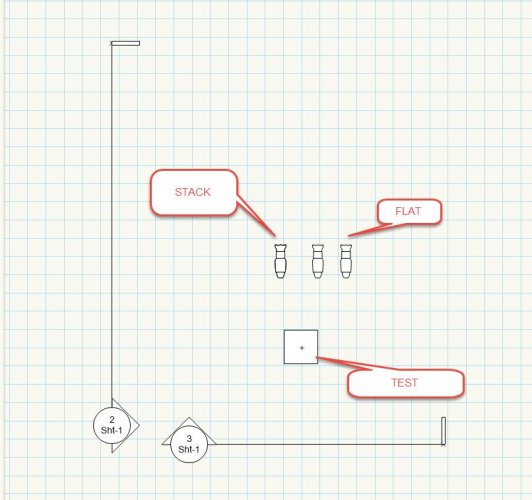

Ok, I've been playing around more with this and here's another example where we need more functionality to get the schematics to lay out correctly: Here's an example of my "ladder" position with a light focused upstage and a light focused as side light. As you can see, the plan view doesn't take into account the rotation against the normal (Y) axis. I would love the ability to set the orientation of the top view of the symbol. I'm sure I can "make this work" by rotating the position, but this assumes all my lights are hung the same way in all positions. We really need to be able to control a few more variables in the schematic view. Similarly, this doesn't show an accurate depiction of the 3D model in other views: To correct this I'm forced to rotate my hanging position base symbol into the orientation I want the lights drawn and then re-rotate it into the actual model space to get it to lay out. For the most part I'm able to work around this to get the paperwork to lay out properly, but I can envision a situation where I'm going to need to draw the units differently and I need a finer grain of control. Something like "use layer plane rotation" rather than whatever the model is laying out in.

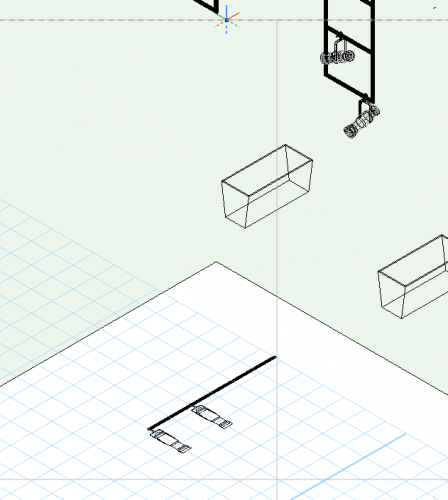

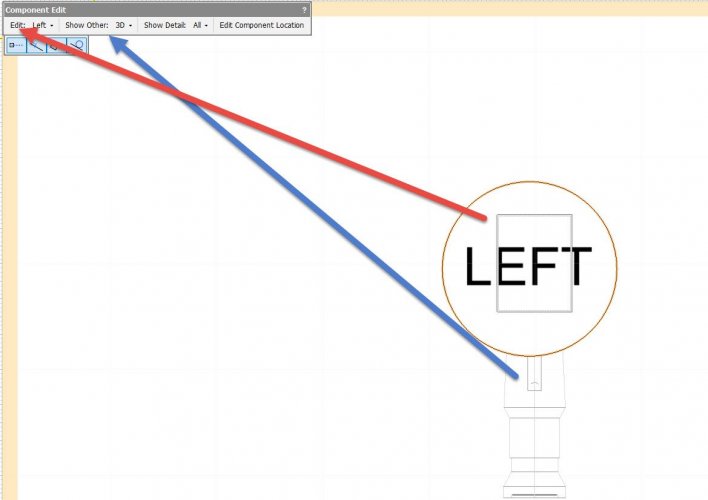

I was thrilled to see the schematic views as a new feature, as the model viewports were next to useless when you needed accuracy in a model. (I actually opted to maintain a 3D file and a 2D file as it saved me time.) I cannot stress enough how much I would like this feature to work for theatrical designers. I spent the better part of the morning trying to make this work and I stumbled upon the fact that I needed to create the lighting position perpendicular to the plan and then rotate it up. If I built this as I normally would in 3D (with a boom created as a boom would normally stand) I can't use label legends: Obviously there is a workaround (create the position and rotate it) as I have done here, but this seems like an oversight. Is there a way to display the "2D Component View" AND the view of the schematic so we can control this behavior more accurately? It seems to me that I'm nearly ALWAYS going to want to display the 2D component view in "top" where my label legends will be legible, but I might want to LOOK AT THE SCHEMATIC from the FRONT/LEFT/RIGHT etc... Is there any more insight I can get into this workflow? It is an obvious improvement but I'm going to be forced to rotate all my 3D components 90 degrees to make it work.

-

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

Just a healthy bump on this topic. Is NDI coming anytime in the near future? I'm ramping up two operas and I'd like to give Vision another shot.

Wishlist - Font Override Folder

Daniel B. Chapman posted a question in Wishlist - Feature and Content Requests

It would be wonderful to have a font folder inside Vectorworks where we could override the system fonts. I've had a lot of issues with read/write access in Windows 10 build 18362 where I had to install the fonts globally for them to work in Vectorworks. Given the fact that I now maintain the fonts for cloud publishing, it would be nice to just include the fonts as: %VECTORWORKS%/Fonts/* in addition to the system search path.
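A rough sketch of the lookup order being requested, purely to illustrate the idea: check an application-local override folder first, then fall back to the system folders. Everything here is hypothetical (the function name `find_font`, the extension list, and matching on the file stem); it does not describe how Vectorworks resolves fonts today.

```python
from pathlib import Path

# Assumed set of font file extensions for this illustration.
FONT_EXTENSIONS = {".ttf", ".otf", ".ttc"}


def find_font(file_stem, override_dir, system_dirs):
    """Return the first matching font file, preferring the override folder.

    Matching is a case-insensitive comparison on the file stem, which is
    a simplification; real font matching uses family and style metadata.
    """
    for folder in [override_dir, *system_dirs]:
        folder = Path(folder)
        if not folder.is_dir():
            continue  # a missing override folder just means "no overrides"
        for candidate in sorted(folder.iterdir()):
            if (candidate.suffix.lower() in FONT_EXTENSIONS
                    and candidate.stem.lower() == file_stem.lower()):
                return candidate
    return None
```

With this ordering a font dropped into the override folder wins over a system copy of the same name, and nothing changes for users who never create the folder.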

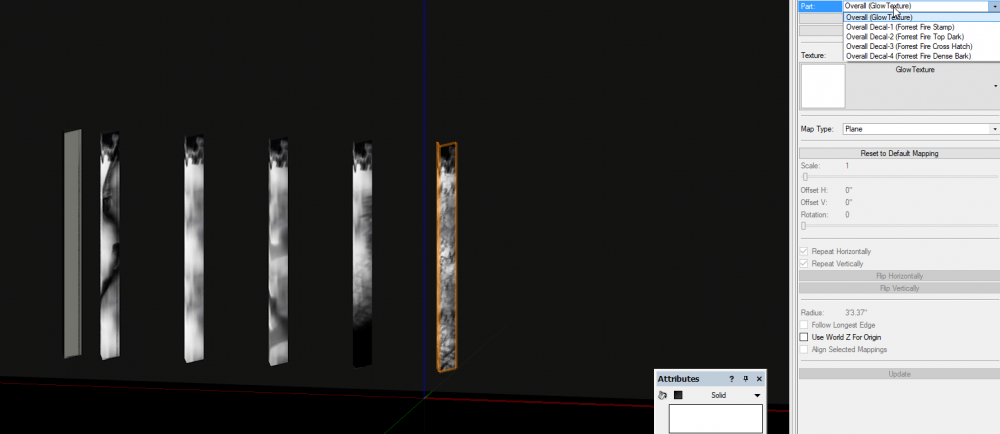

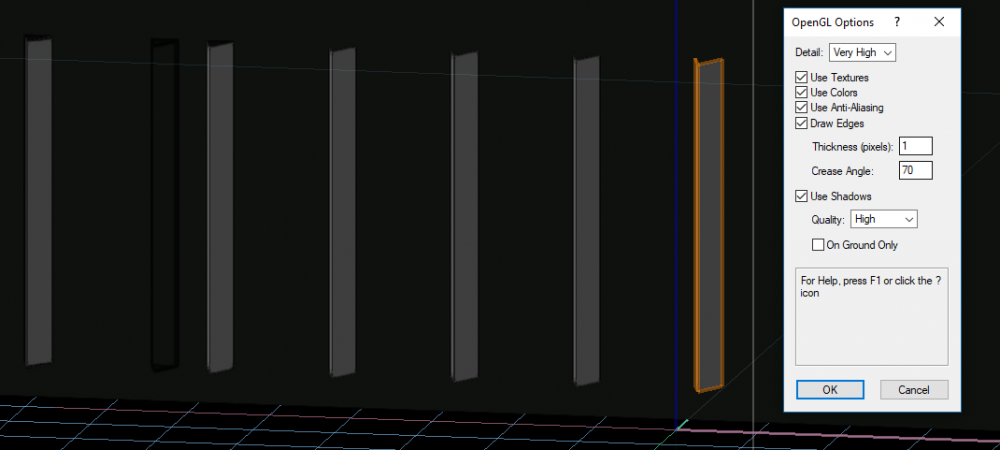

I'm trying to render some paints over a glowing texture (basically simulating paint on a frosted surface that has back-light on it) and it is working in Renderworks. However, when I view the same objects in OpenGL I end up with a blank surface per my second image. Is there something I'm doing wrong? Decals seem like the right approach for this workflow and I would love to be able to set them in OpenGL rather than swapping to Fast Renderworks to set position as it is, for obvious reasons, a faster workflow. Any help would be appreciated.

-

Thanks Joshua, (I quite enjoy your lineset tool.) I realize there's a lot of manipulation behind the scenes for the lighting instruments, but I feel like most of that complexity exists to support the model-view workflow. The core framework finally has the ability to project a 2D drawing in 3D space other than top plan, and it seems like this would be a great time to move from "2D moved to 3D with some tricks" and just work off a 3D model. More to the point: I think (just like you're suggesting with your tool) that most designers either worksheet and move or use an external tool (I favor Capture right now). It would just be nice to have a 3D plot that wasn't a hot mess of design-layer viewports. My workflow is actually just doing worksheets in place with templates as if I was working off pen and paper, as it is actually faster than trying to drop in the focus points and move the lights when I'm done. I know I'm not alone in wishing I could actually work in 3D all the time rather than faking it. Given the circumstances here it might be time to reinvent the wheel in Spotlight. Model-Viewports have always felt like a bad workaround. It seems like this is closer to allowing that to happen.

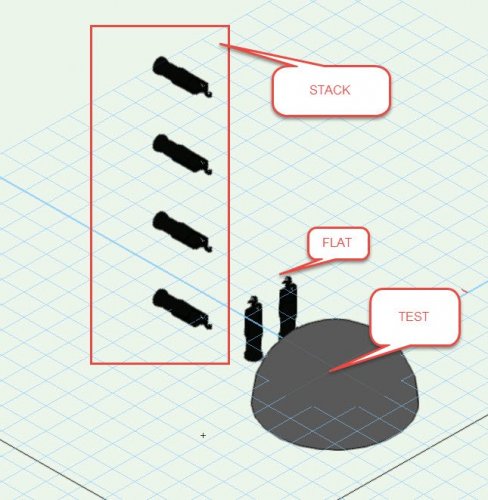

-

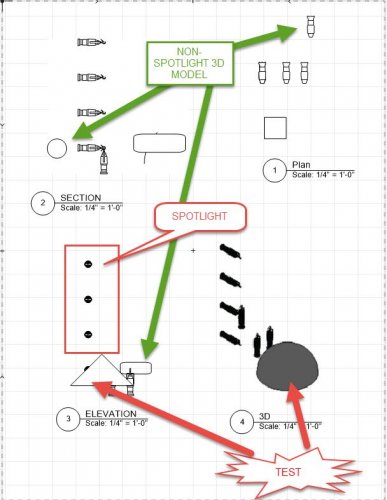

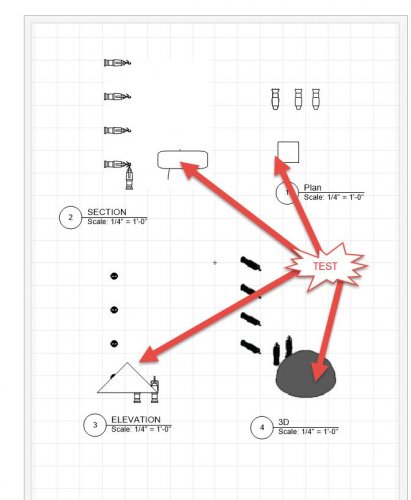

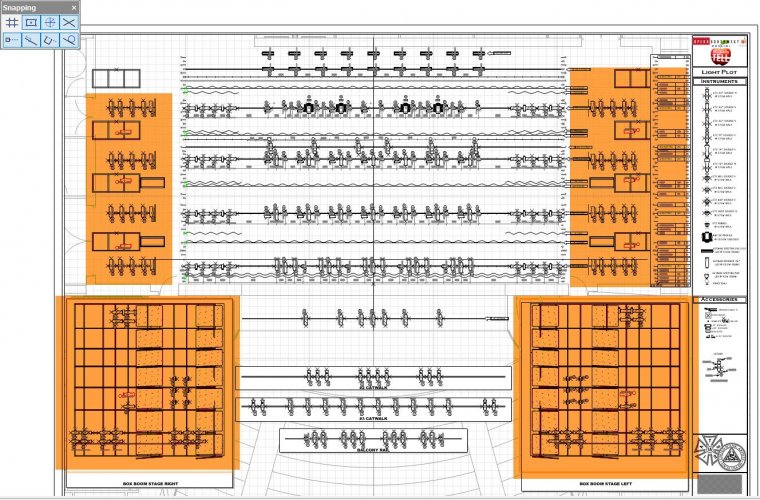

I was really excited to see a feature that's been on the wish list since I've been drawing booms (every light plot for twenty years) and to my dismay it looks like this feature works for everything BUT Spotlight. I'm hoping I'm doing something wrong (I'm confident I'm not) because this is a huge missed opportunity to actually allow us to model in 3D and display the information we need as designers without resorting to tricks, model-layers, and increased complexity. I've taken a default ETC Source 4 36 and dropped it into the drawing. You can see in the images (and the file I attached) that this works for all 3D Symbols unless it is a Spotlight instrument. I was hoping I could drop my label legends in and view my booms in their real location in 3D rather than creating a separate model space. This is, bar none, my biggest complaint about the way Vectorworks models light plots. We have a great array of 3D tools, but if we want to make a document an electrician is used to reading we need to print that in 2D with legends. The 3D label legends start to bridge that gap, but projecting a 2D symbol in a non top/plan viewport would be a game changer for theatrical light plots. Vertical or off-axis lighting positions have always been problematic. It would be nice to draw them in position so I can export them to Vision/whatever directly and use the 3D tool-suite without resorting to tricks. I attached one last drawing highlighting a simple rep plot. The areas in orange are the lights that are drawn "outside" the actual 3D model. It is ~ 30% of the plot and this has a lot of redundancy overhead. That's 30% of my plot that I can't easily use Vectorworks to calculate photometrics, focus, beams. This isn't a great workflow and it looks like there's finally core support for fixing the problem rather than the plot and model views (which are not user friendly and are painful to maintain). It would be nice to see you take the next step and allow Spotlight objects to project in 2D. 
Test File.vwx

-

Vision, better performance on MAC or PC

Daniel B. Chapman replied to Gaspar Potocnik's topic in Vision and Previsualization

Gaspar, I would push for a technical answer from the Vision team. I know for a fact that if they're routing through CoreVideo (I don't think they are) the eGPU via Thunderbolt isn't an option, as macOS won't use the hardware. It should, however, use the OpenGL hardware. In my tests QLab didn't utilize the external hardware (CoreVideo) and Processing did (OpenGL). That was using a Mac Mini 2014 as a test case, which was pretty solid. For whatever it is worth, installing the external hardware in an enclosure was a bit of work and less reliable than I liked. My experience with Qt (which is what Vision and Vectorworks are built on) is that it runs very well on Windows, Linux, or Mac, so you're probably hardware bound. My recent experience leads me to believe that building a simple PC with a high-end graphics card is less expensive than trying to integrate the external enclosure. You're looking at ~ $200 base for the enclosure, which is very similar to the cost of a motherboard and CPU for a PC. That being said, if you're using that card to power other things on your Mac it might be worth it. Best of luck!

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

Mark, I'm actively using another product for previsualization right now and the NDI workflow is extremely easy. I'm using Open Broadcaster Software (OBS Studio) to screen-capture media server output and send it over the network, so there's actually no hardware in this pipeline. NDI has solid support in openFrameworks, and several capture cards (Blackmagic) have utilities for reading directly into a stream. It is easier than dealing with HDCP issues between devices and is simple to use. I'm able to easily stream two SVGA resolution outputs over wifi with no hardware. If you opt to not support it, at a minimum it would be nice to see support for Windows Media Foundation compatible cards and AV Foundation compatible cards on macOS. If you supported the core frameworks I suspect we would all have a much easier time pulling in video.

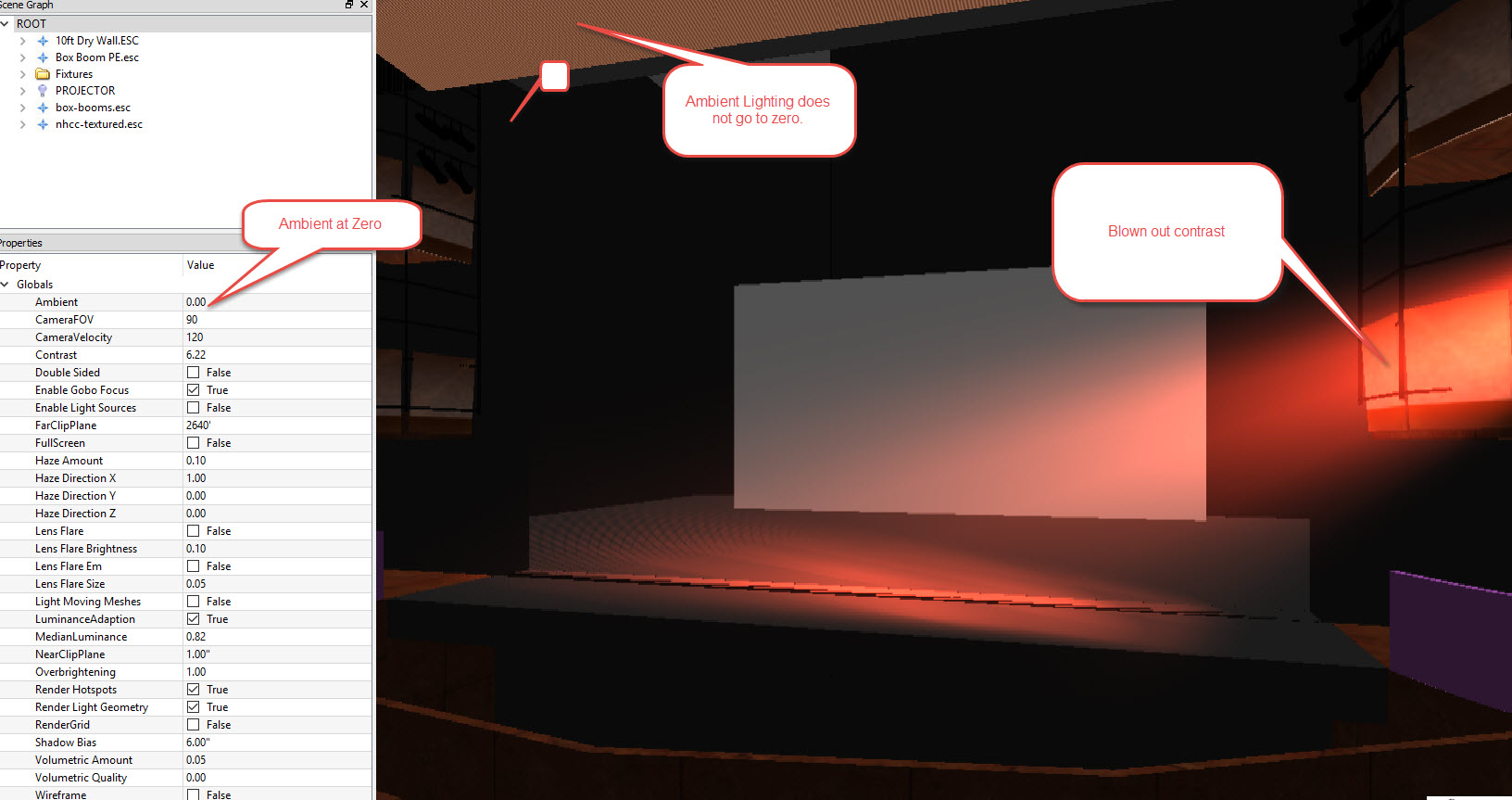

Thanks Edward. On my Windows 10 box there's no change for video. I might make it to my studio to fire up the Mac Pro and see if there's any difference. I'll be using the demo as I'm officially "expired" until this bug is fixed, but I'm happy to test builds as they come out. I'll reinstall the capture card today and take another look sometime this weekend. I've messed around with the median luminescence ('M' and 'N' are the hotkeys if I remember right) and I can't really get a black level. I end up dropping the contrast to make it reasonable, but you end up with that weird blown-out color as a result. I'm happy to hear video is on the list. As a programmer who does a lot of 3D and 2D graphics, I bet it isn't that big an issue once you get a solid test box together. I really, really, really recommend you guys use a clean box for testing. As I let you know, my ancient backup computer does still show Spout/Virtual Cams in Windows 10 x64, and I bet it is either the age of the hardware or something funky since that was upgraded from Windows 7. Also, don't forget about my other post where I show the green/magenta bug in the Mac webcam. I think that's probably a solid clue.

-

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

Mark, I think the general problem with the way Vision is attempting to handle video is that you are not providing solid hardware support, and the only reliable support seems to be CITP, which is pretty similar but far more complex to implement for most applications because it manages a lot more than video. NDI's SDK is here. I have not personally coded up an implementation (though I'm probably adding it to my media servers after this opera). It is really just a UDP video stream that you can tap into. https://www.newtek.com/ndi/sdk/ Essentially the pipeline is just: [NDI Stream Name]::[Frame #][some headers with sizing][pixels] Since that's pushed over IP it is available on localhost or on your network. It has a couple of little preview image things that get pushed, but in general you just pick a name and then decode it on whatever device you want. Here's a link to two utilities I use because I'm using OpenGL calls most of the time: On Windows I just take a Spout broadcast and throw it over the network http://spout.zeal.co/download-spout-to-ndi/ On Mac I run http://techlife.sg/TCPSyphon/ which is basically the same thing. This takes the video hardware entirely out of the chain because you're taking a texture and mapping that to a video stream that is decoded on the other end. It really is pretty simple, especially if you are (and I assume you are) just taking a texture and mapping that to your light source for video. As to your concerns about converters: yes, that's a problem, but it is significantly less of a problem than trying to support all the native capture cards out there (and it is clear your native hooks do not work at the moment). The IP solution bypasses this because there are a variety of utilities to get the format into NDI. There's a "pro version" here for Blackmagic cards: https://itunes.apple.com/us/app/ndi-source/id1097524095?ls=1&mt=12 which makes some pretty cheap USB3/Thunderbolt hardware available for you.
Frankly, I'd rather see Syphon/Spout connectivity, but I operate at a pretty high technical level for video/projection design as I write my own software for most of it. I think having some simple connectors available would be a very quick way to get around this problem. I suspect NDI is going to be a standard that sticks around for quite a while. I first used it on a major corporate event and I'm seeing it everywhere now. At the end of the day I don't actually care how I get a video stream into Vision, I just need to be able to get a video stream into Vision. NDI makes multiple inputs a lot easier and in many cases you can completely bypass a capture card with software. That being said, running an HDMI cable out of a Mac into the back of my PC wouldn't really bother me if it meant I could render projection mapping effects easily for a director. In many ways I think supporting Blackmagic devices would be a good choice as they are relatively low cost (Intensity Shuttle) and the SDK is available for Windows/Mac, so you don't have to do the hard work or depend on the operating system. At the end of the day I just want to get a video source into Vision. 2.3 just doesn't cut it for professional renderings (and I did make that work with an AverMedia PCIe card).
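To make the "name, frame number, sizing headers, pixels" idea above concrete, here is a toy framing sketch. It is emphatically not the NDI wire format, which is handled inside the SDK; the header layout, field sizes, RGBA assumption, and the function names `pack_frame` and `unpack_frame` are all invented for illustration.

```python
import struct

# Hypothetical header, big-endian: name length (2 bytes), frame number
# (4 bytes), width (2 bytes), height (2 bytes). Not the real NDI layout.
HEADER = struct.Struct(">HIHH")
BYTES_PER_PIXEL = 4  # assumes uncompressed RGBA


def pack_frame(stream_name, frame_number, width, height, pixels):
    """Serialize one frame as header + stream name + raw pixel bytes."""
    name = stream_name.encode("utf-8")
    if len(pixels) != width * height * BYTES_PER_PIXEL:
        raise ValueError("pixel buffer does not match width * height * 4")
    return HEADER.pack(len(name), frame_number, width, height) + name + pixels


def unpack_frame(packet):
    """Recover (name, frame_number, width, height, pixels) from a packet."""
    name_len, frame_number, width, height = HEADER.unpack_from(packet)
    name_end = HEADER.size + name_len
    name = packet[HEADER.size:name_end].decode("utf-8")
    pixels = packet[name_end:name_end + width * height * BYTES_PER_PIXEL]
    if len(pixels) != width * height * BYTES_PER_PIXEL:
        raise ValueError("packet is truncated")
    return name, frame_number, width, height, pixels
```

The receiving side only needs the stream name to pick a source and the sizing fields to turn the pixel bytes back into a texture, which is why this kind of pipeline takes the capture hardware out of the chain.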

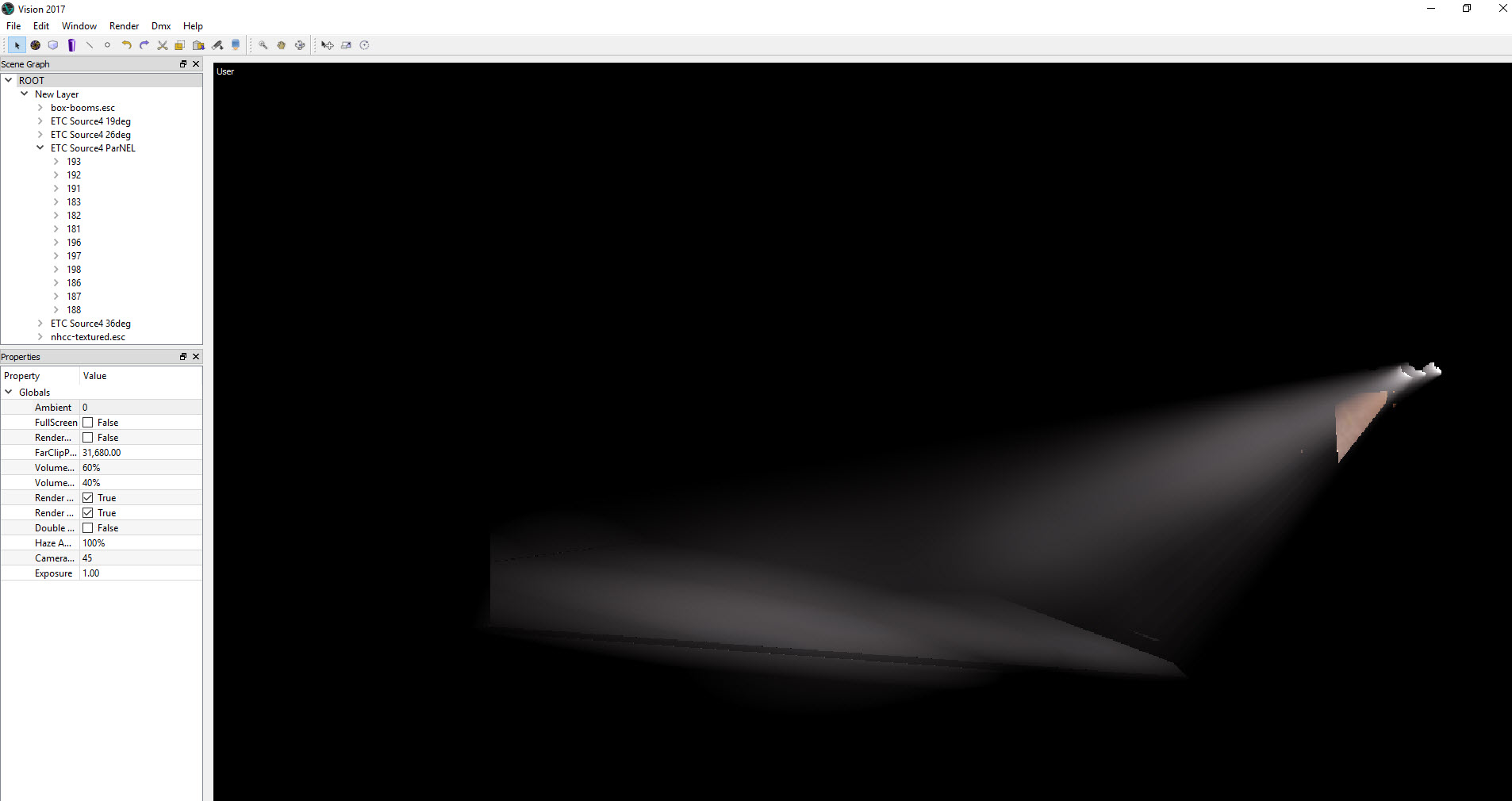

Dominic, As of this point, no, I don't think it is fixed. If you get it working I would absolutely love to hear what your system is so I can track down what's happening on mine. I just tried 2018 on a Windows 10 machine and had the same result. I've had some luck with Vision 2.3 and an AVerMedia card, but the rendering quality just isn't there for my clients. I'm being a pest here on the forums in the hopes that this will elevate the issue to "needs to be fixed" rather than "backlogged". Here's the card I'm using with Vision 2.3: https://www.amazon.com/AVerMedia-Definition-Composite-Component-C027/dp/B002SQE1O0/ref=sr_1_1?ie=UTF8&qid=1505419715&sr=8-1&keywords=avermedia+capture+card+pcie Edward has been very helpful with trying to make this work, but I think the bottleneck is on the developer side of this problem. It is frustrating because Vision is definitely the easiest workflow right now, but 2.3 definitely has issues with black levels and ambient lighting when trying to get something "dark" working. I've attached a screenshot so you know what you're getting into. These are the same models (and no, I didn't pick that ugly orange color, that's an R02 I think). As a designer trying to figure out if my shots work or doing focus points, 2.3 is fantastic. If I'm trying to show this to a client it is a no-go. If this is a road block for you I'd encourage you to contact support. I think I'm the only one bugging them on this issue so it isn't a priority. Best of luck! (The dark one is Vision 2017, the lighter one is 2.3 with 0 ambient lighting)

-

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

** by "not plug anything into" I mean I literally cannot purchase a capture card that will work with Vision 2017/2018 on Windows 10 or Mac OS and render video inside Vision. This isn't a case of me trying to skirt a hardware requirement. I would happily pay $300 to get this working right now and the answer is I cannot.

Feature Request: NDI Support

Daniel B. Chapman replied to Daniel B. Chapman's topic in Vision and Previsualization

Mark, As a developer myself I can understand the hurdles. I'd also like to point out that video input has been broken in Vision 4, Vision 2017, and Vision 2018 with some, but not all, capture cards working in Vision 2.3. I have (4) computers that I can test against (a Surface, a MacBook Pro 2016 Touch, a MacBook Pro 2010, and a box I built myself running Windows 10 that's nothing fancy) and none of these have working video input. Mac OS ends up the closest with some bizarre pixelated green/magenta image of my webcam. Considering the fact video is broken in Vision as it stands, you guys might want to explore "universal" connectors like NDI, as it seems your ability to support hardware is hampered at best. I could be wrong and a video patch is on the way, but I downloaded the Vision 2018 demo today and I get the same broken interface with no available inputs for video projection. While I'm on the topic it would be nice to see you integrate:
* Blackmagic inputs (the SDK is pretty well documented) https://www.blackmagicdesign.com/support/ Why? Because they are solid, affordable capture cards that run on Mac and Windows.
* Syphon inputs on Mac (again, very well documented software and a simple texture share; given the fact you're rendering in OpenGL this is borderline trivial) http://syphon.v002.info/ Why? Because it is native to Mac OS and used in the VJ pipeline by basically every media server. This bypasses the hardware issue entirely so it seems like an obvious choice.
* Spout inputs on Windows (which is basically Syphon for Windows) http://spout.zeal.co/ Why? Because it is Syphon for Windows. It bypasses the hardware chain entirely and is a simple shared texture.
* Support for Virtual Webcams in general, which would render all the above moot as you could use Syphon or Spout to make virtual inputs OR something like NDI, which has converters for Blackmagic, Syphon, Spout, Capture Cards, Web Cams, other media servers and CITP protocols.
Since this runs over TCP/IP it also renders the hardware question moot. As a software company the phrase "renders the hardware question moot" should be of interest to you. I've been fairly patient on this issue, but I'm currently evaluating Capture Nexum and Realizzer for purchase in my next project because Vision is broken for video. I've already suspended my subscription until this issue is fixed. And I suspect that will mean I'm running Vision 2017 for a very long time at this rate. "Which media servers are using this protocol? I haven't looked at them in a while so I'm a bit behind the times." This isn't really a valid question at this point given the state of projection design. Not everyone is running a Hippotizer or a D3 on gigs. Many of us use Watchout, QLab, Resolume, or a variety of other pipelines (TouchDesigner/Isadora) in our work at the regional level for theater and dance, and the inability to plug anything into Vision is extraordinarily frustrating.

I've recently been using NDI in my previz workflow to pass streams from machine to machine and it is definitely easier than any of the other solutions I've worked with recently. Can I request that we get this into Vision to replace the clunky video capture problems? https://www.newtek.com/ndi/ Here's a link to the openFrameworks plugin which gives some idea of how easy this actually is: https://github.com/leadedge/ofxNDI I think the receiver code (video source) is less than 600 lines of code interfacing with the SDK.

-

In 2017 I'm unable to sort the scene graph to arrange the fixtures in a way that makes sense. Am I missing something? Is there a better way to select fixtures to focus them? (I have nearly 250 conventional fixtures on this model.)

-

I'm going to reference this long standing thread as the baseline for this post: I have a simple project requirement:
1) Run QLab or some other media server on a computer
2) Pipe that media server into Vision (PC) as a Video Input/Camera Input to be visualized for the design team so I can integrate video and lighting in a video rendering.
Vision theoretically supports video capture cards but we don't have a list of approved devices. I don't particularly care if this is Vision 2 32-bit, Vision 2 64-bit, Vision 4, or Vision 2017, I just need this to work for this (simple) project. Has anyone had luck getting a camera to work in the last couple of years? I'm open to any suggestion (including piping it in via OpenFrameworks or Processing if I have to).

-

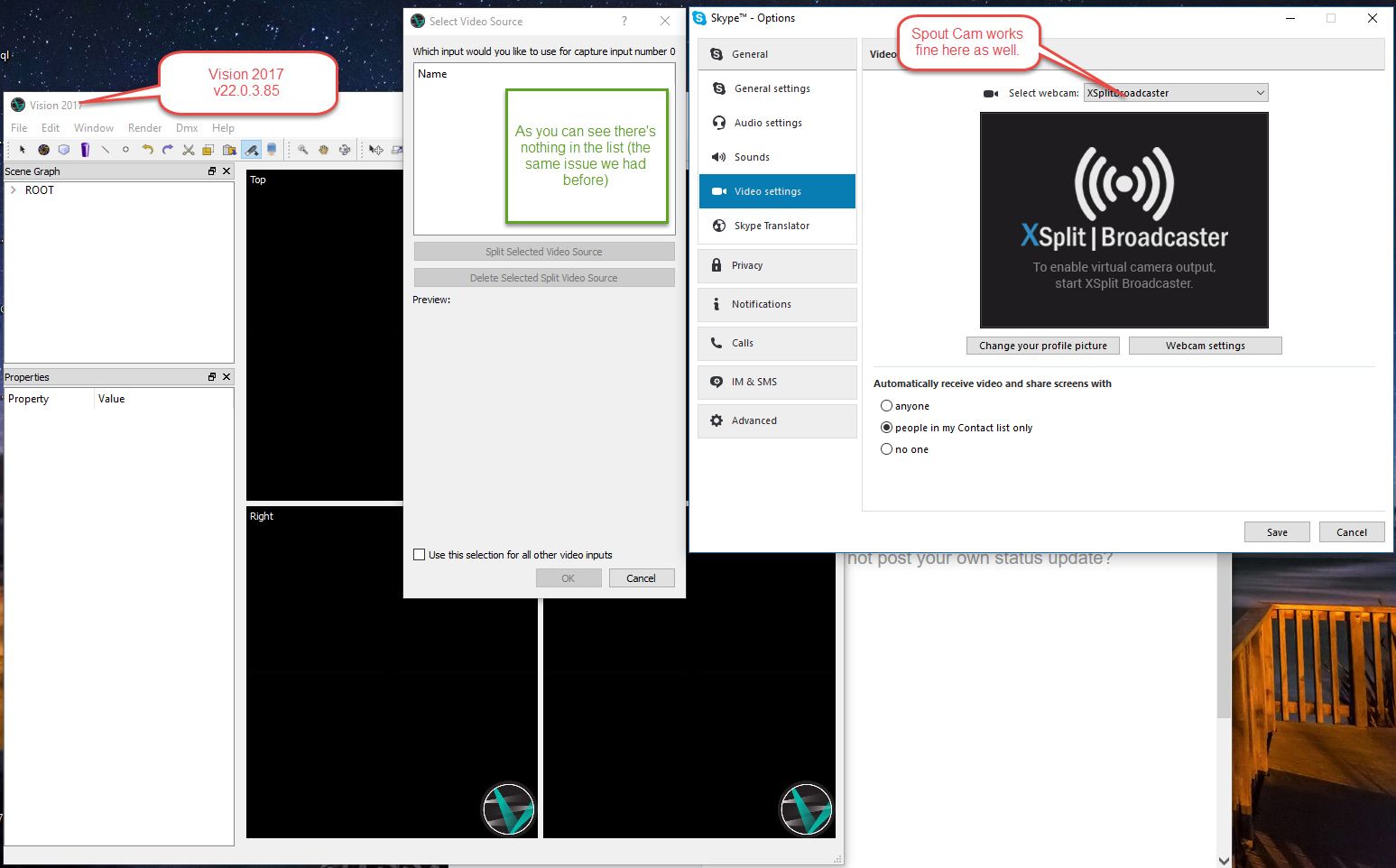

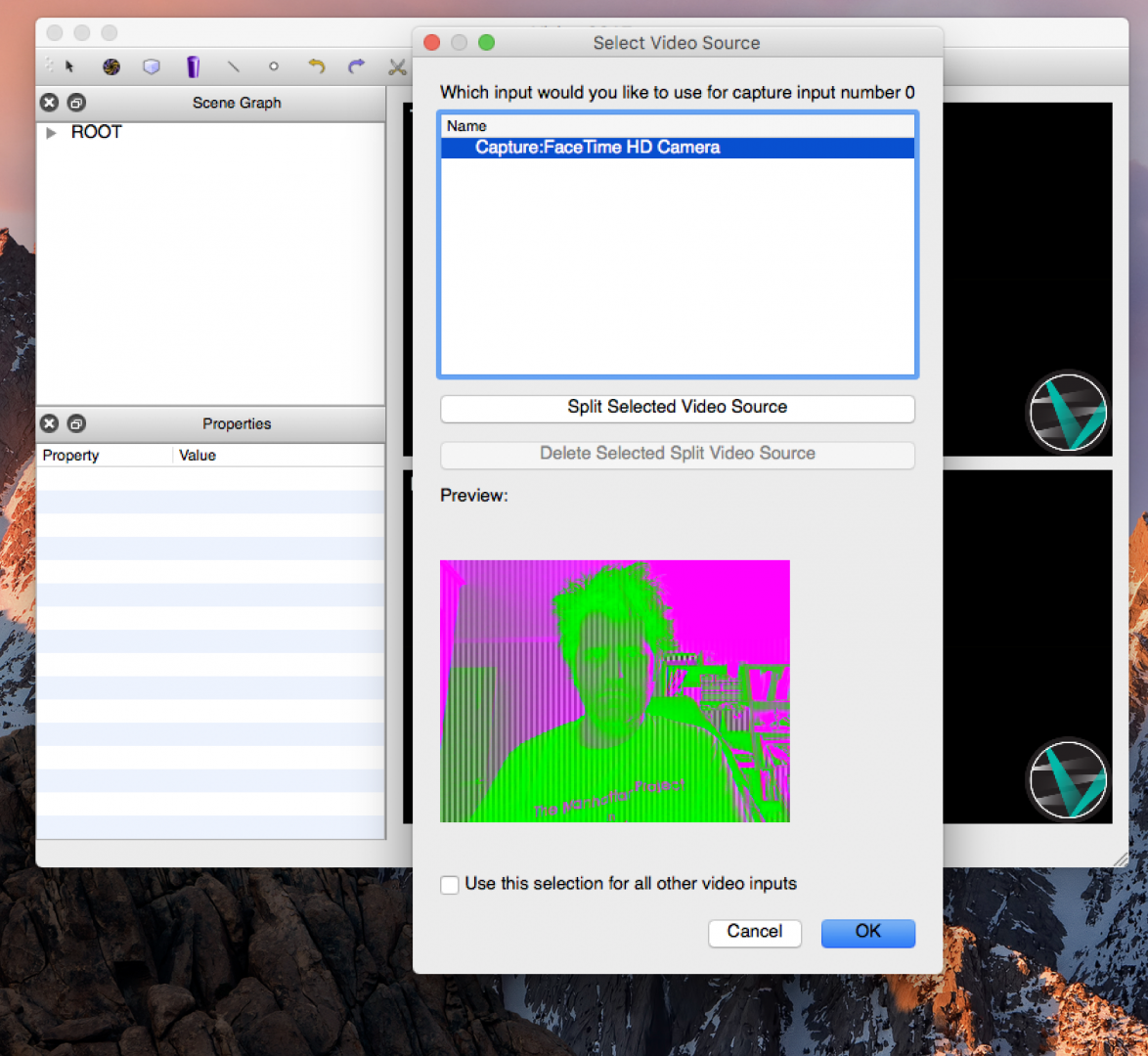

Kevin, I can't stress how silly this is right now. Virtual Cameras (not Spout/Syphon), which are cameras to the operating system, should be fairly trivial to get working and would allow me--and I suspect others--to do 90% of what we need to do for single-source designs. By way of continuing the discussion, here's where we are with basic hardware on the operating system (photos to follow): As you can see, with Windows none of the streams are detected. I made a point of using XSplit Broadcaster rather than Spout to prove a point. Skype is happily running in the corner and detects this as a webcam (I should note that Vision detected Spout in the past using this method). We have a little better luck here with OSX, as the FaceTime camera is finally listed, but you can see the quality of that image is useless--and rather interesting. I would think that the built-in hardware would qualify as the basic check, and it isn't functional. If we have a piece of hardware that works in a test environment can we list it? I'd like to get this working ASAP and I'm fine with quirks. Lighting designs often incorporate video at this point in time and I would like to use Vision to visualize my designs. If that isn't/can't happen then I need to find a new tool that isn't projecting low-resolution video at a cardboard model in my basement. (For whatever it is worth, I was expecting the OSX test to fail; my other MacBook Pro (2010) didn't list the camera the last time I tried, nor did the 2012 iMac at the office. This is on a new MacBook Pro (2016/Touch) so maybe there was better luck on the driver.)